Introduction

Welcome to the Neuromatch computational neuroscience course!

⚠ Experimental LLM-enhanced materials ⚠

This version of the computational neuroscience course incorporates Chatify 🤖 functionality. This is an experimental extension that adds support for a large language model-based helper to some of the tutorial materials. Instructions for using the Chatify extension are provided in the relevant tutorial notebooks. Note that using the extension may cause breaking changes and/or provide incorrect or misleading information.

Thanks for giving Chatify a try! Love it? Hate it? Either way, we’d love to hear from you about your Chatify experience! Please consider filling out our brief survey to provide feedback and help us make Chatify more awesome!

Orientation Video

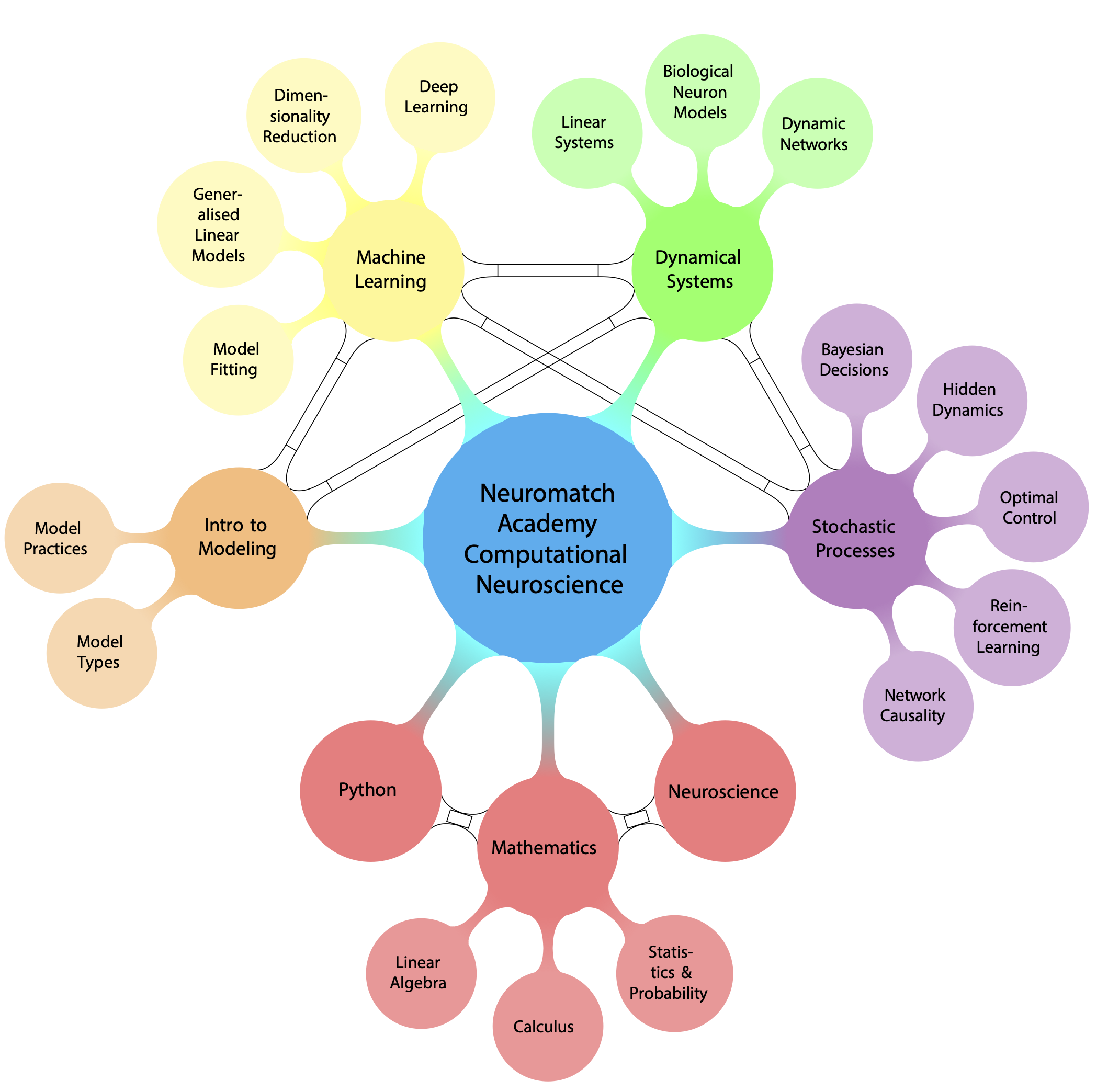

Curriculum overview

Welcome to the comp neuro course!

We have curated a curriculum that spans most areas of computational neuroscience (a hard task in an increasingly big field!). We will expose you to both theoretical modeling and more data-driven analyses. This section gives an overview of the curriculum.

We will start with several optional prerequisite refreshers.

The Neuro Video Series is a set of 12 videos that cover basic neuroscience concepts and methods. These videos are completely optional and do not need to be watched in a fixed order, so you can pick and choose the ones that will help you brush up on your knowledge.

The prerequisite refresher days are asynchronous, so you can go through the material on your own time. You will learn how to code in Python from scratch, using a simple neural model, the leaky integrate-and-fire model, as motivation. Then you will cover linear algebra, calculus, and probability & statistics. The topics covered on these days were carefully chosen based on what you need for the comp neuro course.
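To give a feel for the model that motivates the Python refresher, here is a minimal sketch of a leaky integrate-and-fire neuron integrated with the forward Euler method. The function name `simulate_lif` and all parameter values are illustrative assumptions, not taken from the course materials.

```python
def simulate_lif(i_input, t_max=0.1, dt=0.0001, tau=0.02, v_rest=-0.07,
                 v_reset=-0.075, v_thresh=-0.05, resistance=1e7):
    """Simulate a leaky integrate-and-fire neuron with forward Euler.

    All default values are illustrative assumptions
    (SI units: seconds, volts, ohms, amperes).
    Returns the membrane voltage at each step and the spike times.
    """
    n_steps = int(round(t_max / dt))
    v = v_rest
    voltages = []
    spike_times = []
    for step in range(n_steps):
        # Membrane equation: tau * dV/dt = -(V - v_rest) + R * I
        dv = (-(v - v_rest) + resistance * i_input) * (dt / tau)
        v += dv
        if v >= v_thresh:
            # Threshold crossed: record a spike and reset the voltage
            spike_times.append((step + 1) * dt)
            v = v_reset
        voltages.append(v)
    return voltages, spike_times


# A constant input strong enough to drive the neuron above threshold
voltages, spike_times = simulate_lif(i_input=3e-9)
print(f"{len(spike_times)} spikes in {len(voltages)} time steps")
```

With zero input, the voltage in this sketch stays at rest and no spikes are produced. Increasing the input current raises the firing rate.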

After this, it’s the start of the proper course! You will start out with the module on Intro to Modeling. On the first day, you’ll learn all about the broad types of questions we can ask with models in neuroscience (Model Types). You will learn that we can use models to ask what happens, how it happens, and why it happens in the brain. Importantly, we classify models into ‘what’, ‘how’, and ‘why’ models, not based on the toolkit used, but on the questions asked!

After this solid grounding in what questions you can ask with models and the process to start doing so, you will move to the module on Machine Learning. This module covers fitting models to data and using them to ask and answer questions in neuroscience. We can pose all sorts of questions (including what, how, and why questions) using machine learning, but we focus especially on data-driven analyses, which often ask what is happening in the brain. You will learn about key principles behind fitting models to data (Model Fitting), how to use generalized linear models to fit encoding and decoding models (Generalized Linear Models), how to uncover underlying lower-dimensional structure in data (Dimensionality Reduction), and how to use deep learning to build more complex encoding models, including comparing deep networks to the visual system (Deep Learning). You’ll then dive into projects and follow a step-by-step guide to the modeling process that you will apply to your own projects (Modeling Practice).
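The idea of fitting a model to data, mentioned above under Model Fitting, can be illustrated with a minimal sketch of ordinary least squares for a straight line. The helper `fit_line` and the stimulus and firing-rate numbers are made up for illustration. They are not course data or the course's own implementation.

```python
def fit_line(xs, ys):
    """Return (slope, intercept) minimizing the squared error of
    y = slope * x + intercept (ordinary least squares, closed form)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Sum of co-deviations of x and y, and sum of squared deviations of x
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    slope = cov_xy / var_x
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Hypothetical data: stimulus intensity vs. firing rate (made-up numbers)
stimulus = [0.0, 1.0, 2.0, 3.0, 4.0]
rate = [2.1, 3.9, 6.2, 7.8, 10.0]

slope, intercept = fit_line(stimulus, rate)
print(f"rate = {slope:.2f} * stimulus + {intercept:.2f}")
```

This toy model answers a 'what' question: it describes how the response varies with the stimulus without explaining the mechanism.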

Next, you’ll move to the module on Dynamical Systems. In this module, you will learn about dynamical systems and how to apply them to build more biologically plausible models of neurons and networks of neurons. In Linear Systems, you will cover foundational knowledge on dynamical systems that you will use throughout the rest of the course, including a brief dive into stochastic systems, which underlie the next module. During Biological Neuron Models, you will start to use this knowledge to build models of individual neurons that are more rooted in biology. In Dynamic Networks, you will build on the previous day to start constructing and analyzing networks of neurons. We will often ask ‘how’ questions using these models: how are things in the brain happening mechanistically? These models are often not directly fit to data (in contrast to the machine learning models), but instead are built from bottom-up knowledge of the system.

Next, you will move to the module on Stochastic Processes. We start with a day on Bayesian inference in the context of making decisions (Bayesian Decisions). Specifically, you will learn how to estimate a state of the world from measurements. On the next day, we extend this to include time: the state of the world now changes over time (Hidden Dynamics). Next, we look at how we can take actions to affect the state of the world (Optimal Control and Reinforcement Learning). Once again, these models can be used as ‘what’, ‘how’, or ‘why’ models, but we focus on asking ‘why’ questions (why should the brain compute this?).
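Estimating a state of the world from measurements can be sketched with Bayes' rule over a small discrete set of states. The function `posterior`, the state names, and the probabilities below are hypothetical, chosen only to illustrate the update. They do not come from the course tutorials.

```python
def posterior(prior, likelihood):
    """Apply Bayes' rule over a discrete set of states.

    prior:      dict mapping state -> P(state)
    likelihood: dict mapping state -> P(measurement | state)
    Returns a dict mapping state -> P(state | measurement).
    """
    unnormalized = {s: prior[s] * likelihood[s] for s in prior}
    evidence = sum(unnormalized.values())  # P(measurement)
    return {s: p / evidence for s, p in unnormalized.items()}


# Hypothetical example: is a stimulus on the left or on the right?
prior = {"left": 0.5, "right": 0.5}
# Assumed probability of the observed measurement under each state
likelihood = {"left": 0.8, "right": 0.2}

belief = posterior(prior, likelihood)
print(belief)

# A second, independent measurement with the same likelihood sharpens the belief
belief = posterior(belief, likelihood)
print(belief)
```

Using the posterior from one measurement as the prior for the next is the basic step that state estimation over time builds on.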

Finally, we end by learning about causality (Network Causality). This covers one of the most important questions in science: when can we determine that two things are causally related rather than just correlated?