Tutorial 1: Differentiation and Integration


Week 0, Day 4: Calculus

By Neuromatch Academy

Content creators: John S Butler, Arvind Kumar with help from Ella Batty

Content reviewers: Aderogba Bayo, Tessy Tom, Matt McCann

Production editors: Matthew McCann, Spiros Chavlis, Ella Batty

Tutorial Objectives

Estimated timing of tutorial: 80 minutes

In this tutorial, we will cover aspects of calculus that will be frequently used in the main NMA course. We assume that you have some familiarity with calculus but may be a bit rusty or may not have done much practice. Specifically, the objectives of this tutorial are to:

Get an intuitive understanding of differentiation and integration operations

Learn to calculate the derivatives of 1- and 2-dimensional functions/signals numerically

Become familiar with the concept of the neuron transfer function in 1 and 2 dimensions

Become familiar with the idea of numerical integration using the Riemann sum

Setup

Install dependencies

# @title Install dependencies
!pip install sympy --quiet

Install and import feedback gadget

# @title Install and import feedback gadget
!pip3 install vibecheck datatops --quiet

from vibecheck import DatatopsContentReviewContainer


def content_review(notebook_section: str):
    return DatatopsContentReviewContainer(
        "",  # No text prompt
        notebook_section,
        {
            "url": "https://pmyvdlilci.execute-api.us-east-1.amazonaws.com/klab",
            "name": "neuromatch-precourse",
            "user_key": "8zxfvwxw",
        },
    ).render()


feedback_prefix = "W0D4_T1"

# Imports
import numpy as np
import scipy.optimize as opt  # import root-finding algorithm
import sympy as sp  # Python toolbox for symbolic maths
import matplotlib.pyplot as plt
from mpl_toolkits.mplot3d import Axes3D  # Toolbox for rendering 3D figures
from mpl_toolkits import mplot3d  # Toolbox for rendering 3D figures

Figure Settings

# @title Figure Settings
import logging
logging.getLogger('matplotlib.font_manager').disabled = True

import ipywidgets as widgets  # interactive display
from ipywidgets import interact
%config InlineBackend.figure_format = 'retina'
# use NMA plot style
plt.style.use("https://raw.githubusercontent.com/NeuromatchAcademy/content-creation/main/nma.mplstyle")
my_layout = widgets.Layout()

fig_w, fig_h = 12, 4.5
my_fontsize = 16
my_params = {'axes.labelsize': my_fontsize,
             'axes.titlesize': my_fontsize,
             'figure.figsize': [fig_w, fig_h],
             'font.size': my_fontsize,
             'legend.fontsize': my_fontsize-4,
             'lines.markersize': 8.,
             'lines.linewidth': 2.,
             'xtick.labelsize': my_fontsize-2,
             'ytick.labelsize': my_fontsize-2}

plt.rcParams.update(my_params)

Plotting Functions

# @title Plotting Functions

def move_sympyplot_to_axes(p, ax):
    backend = p.backend(p)
    backend.ax = ax
    backend.process_series()
    backend.ax.spines['right'].set_color('none')
    backend.ax.spines['bottom'].set_position('zero')
    backend.ax.spines['top'].set_color('none')
    plt.close(backend.fig)


def plot_functions(function, show_derivative, show_integral):
    # For sympy we first define our symbolic variable
    x, y, z, t, f = sp.symbols('x y z t f')

    # We define our function
    if function == 'Linear':
        f = -2*t
        name = r'$-2t$'
    elif function == 'Parabolic':
        f = t**2
        name = r'$t^2$'
    elif function == 'Exponential':
        f = sp.exp(t)
        name = r'$e^t$'
    elif function == 'Sine':
        f = sp.sin(t)
        name = r'$sin(t)$'
    elif function == 'Sigmoid':
        f = 1/(1 + sp.exp(-(t-5)))
        name = r'$\frac{1}{1+e^{-(t-5)}}$'

    if show_derivative and not show_integral:
        # Calculate the derivative of the selected function with respect to t
        diff_f = sp.diff(f)
        print('Derivative of', f, 'is ', diff_f)

        p1 = sp.plot(f, diff_f, show=False)
        p1[0].line_color = 'r'
        p1[1].line_color = 'b'
        p1[0].label = 'Function'
        p1[1].label = 'Derivative'
        p1.legend = True
        p1.title = 'Function = ' + name + '\n'
        p1.show()

    elif show_integral and not show_derivative:
        # Integrate, then shift by a constant so that the integral is 0 at t = -10
        int_f = sp.integrate(f)
        int_f = int_f - int_f.subs(t, -10)
        print('Integral of', f, 'is ', int_f)

        p1 = sp.plot(f, int_f, show=False)
        p1[0].line_color = 'r'
        p1[1].line_color = 'g'
        p1[0].label = 'Function'
        p1[1].label = 'Integral'
        p1.legend = True
        p1.title = 'Function = ' + name + '\n'
        p1.show()

    elif show_integral and show_derivative:
        diff_f = sp.diff(f)
        print('Derivative of', f, 'is ', diff_f)
        int_f = sp.integrate(f)
        int_f = int_f - int_f.subs(t, -10)
        print('Integral of', f, 'is ', int_f)

        p1 = sp.plot(f, diff_f, int_f, show=False)
        p1[0].line_color = 'r'
        p1[1].line_color = 'b'
        p1[2].line_color = 'g'
        p1[0].label = 'Function'
        p1[1].label = 'Derivative'
        p1[2].label = 'Integral'
        p1.legend = True
        p1.title = 'Function = ' + name + '\n'
        p1.show()

    else:
        p1 = sp.plot(f, show=False)
        p1[0].line_color = 'r'
        p1[0].label = 'Function'
        p1.legend = True
        p1.title = 'Function = ' + name + '\n'
        p1.show()


def plot_alpha_func(t, f, df_dt):
    plt.figure()
    plt.subplot(2, 1, 1)
    plt.plot(t, f, 'r', label='Alpha function')
    plt.xlabel('Time (au)')
    plt.ylabel('Voltage')
    plt.title('Alpha function (f(t))')
    # plt.legend()

    plt.subplot(2, 1, 2)
    plt.plot(t, df_dt, 'b', label='Derivative')
    plt.title('Derivative of alpha function')
    plt.xlabel('Time (au)')
    plt.ylabel('df/dt')
    # plt.legend()


def plot_charge_transfer(t, PSP, numerical_integral):
    fig, axes = plt.subplots(1, 2)
    axes[0].plot(t, PSP)
    axes[0].set(xlabel='t', ylabel='PSP')
    axes[1].plot(t, numerical_integral)
    axes[1].set(xlabel='t', ylabel='Charge Transferred')

Section 0: Introduction

Video 1: Why do we care about calculus?

Submit your feedback

# @title Submit your feedback

content_review(f"{feedback_prefix}_Why_do_we_care_about_calculus_Video")

Section 1: What is differentiation and integration?

Video 2: A geometrical interpretation of differentiation and integration

Submit your feedback

# @title Submit your feedback

content_review(f"{feedback_prefix}_A_geometrical_interpretation_of_differentiation_and_integration_Video")

This video covers the definition of differentiation and integration, highlights the geometrical interpretation of each, and introduces the idea of eigenfunctions.

Calculus is a part of mathematics concerned with continuous change. There are two branches of calculus: differential calculus and integral calculus.

Differentiation of a function \(f(t)\) gives you the derivative of that function, \(\frac{d(f(t))}{dt}\). A derivative captures how sensitive a function is to slight changes in the input for different ranges of inputs. Geometrically, the derivative of a function at a certain input is the slope of the function at that input. For example, as you drive, the distance traveled changes continuously with time. The derivative of the distance traveled with respect to time is the velocity of the vehicle at each point in time. The velocity tells you the rate of change of the distance traveled at different points in time. If you have a low velocity (a small derivative), the distance traveled doesn’t change much for small changes in time. A high velocity (a big derivative) means that the distance traveled changes a lot for small changes in time.

The sign of the derivative of a function (or signal) tells whether the signal is increasing or decreasing. For a signal going through changes as a function of time, the derivative will become zero when the signal changes its direction of change (e.g., from increasing to decreasing). That is, at local minimum or maximum values, the slope of the signal will be zero. This property is used in optimization problems, but we can also use it to find peaks in a signal.
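As a small illustration that is not part of the original notebook, the sketch below finds the peaks of a sampled signal. It looks for the points where the finite-difference derivative changes sign from positive to negative. The test signal and the variable names are hypothetical choices for this example.

```python
import numpy as np

# Hypothetical test signal: a sine wave sampled every dt time units
dt = 0.01
t = np.arange(0, 4 * np.pi, dt)
signal = np.sin(t)

# Finite-difference estimate of the derivative (one sample shorter than the signal)
d_signal = np.diff(signal) / dt

# A local maximum sits where the slope changes from positive to non-positive
is_peak = (d_signal[:-1] > 0) & (d_signal[1:] <= 0)
peak_idx = np.where(is_peak)[0] + 1  # +1: the peak is the sample shared by the two slopes

print(t[peak_idx])  # close to pi/2 and 5*pi/2, the maxima of sin(t) in this range
```

Real recordings are noisy, so in practice you would usually smooth the signal first. This sketch only shows the derivative-sign idea.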

Integration can be thought of as the reverse of differentiation. If we integrate the velocity with respect to time, we can calculate the distance traveled. By integrating a function, we are essentially trying to find functions that would have the original one as their derivative. When we integrate a function, our integral will have an added unknown scalar constant, \(C\). For example, if \(f(t)\) is the integral of a function \(g(t)\), then

\[ f(t) = \int g(t)\,dt = G(t) + C, \]

where \(G(t)\) is any function whose derivative is \(g(t)\).

This constant exists because the derivative of a constant is 0, so knowing the derivative alone does not tell us what the constant should be. This is an indefinite integral. If we compute a definite integral, that is, the integral between two limits of the input, we will not have this unknown constant, and the integral will capture the area under the curve of the function between those two limits.
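The following minimal SymPy sketch shows the difference between indefinite and definite integrals. It uses a hypothetical function \(g(t) = 3t^2 + 2t\). When `sp.integrate` is given only the variable, it returns a single antiderivative without the constant \(C\). When it is given limits, it returns a number.

```python
import sympy as sp

t, C = sp.symbols('t C')

# Hypothetical example integrand (not from the tutorial)
g = 3*t**2 + 2*t

# Indefinite integral: SymPy returns one antiderivative and omits the constant C
F = sp.integrate(g, t)
print('Antiderivative:', F)           # t**3 + t**2

# Adding any constant C does not change the derivative
print(sp.diff(F + C, t) - g)          # 0

# Definite integral between t = 0 and t = 2: the constant cancels out
area = sp.integrate(g, (t, 0, 2))
print('Area from 0 to 2:', area)      # 12
print(F.subs(t, 2) - F.subs(t, 0))    # 12, the same value
```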

Interactive Demo 1: Geometrical understanding

In the interactive demo below, you can pick different functions to examine in the drop-down menu. You can then choose to show the derivative function and/or the integral function.

For the integral, we have chosen the unknown constant \(C\) such that the integral function equals \(0\) at the left x-axis limit, \(t = -10\). The integral therefore reflects the area under the curve starting from that position.

For each function:

Examine just the function first. Discuss and predict what the derivative and integral will look like. Remember that derivative = slope of the function, integral = area under the curve from \(t = -10\) to that \(t\).

Check the derivative - does it match your expectations?

Check the integral - does it match your expectations?

# @markdown Execute this cell to enable the widget

function_options = widgets.Dropdown(
    options=['Linear', 'Exponential', 'Sine', 'Sigmoid'],
    description='Function',
    disabled=False,
)

derivative = widgets.Checkbox(
    value=False,
    description='Show derivative',
    disabled=False,
    indent=False
)

integral = widgets.Checkbox(
    value=False,
    description='Show integral',
    disabled=False,
    indent=False
)


def on_value_change(change):
    # Reset both checkboxes whenever a new function is selected
    derivative.value = False
    integral.value = False


function_options.observe(on_value_change, names='value')

interact(plot_functions, function=function_options, show_derivative=derivative, show_integral=integral);

In the demo above, you may have noticed that the derivative and integral of the exponential function are the same as the exponential function itself.

When differentiated or integrated, some functions, like the exponential function, equal a scalar times the same function. This parallels the idea of the eigenvectors of a matrix, which you saw yesterday: when multiplied by the matrix, they equal a scalar times themselves.

When

\[ \frac{d(f(t))}{dt} = a \cdot f(t), \]

we say that \(f(t)\) is an eigenfunction of the derivative operator, where \(a\) is a scaling factor. Similarly, when

\[ \int f(t)\,dt = a \cdot f(t), \]

we say that \(f(t)\) is an eigenfunction of the integral operator.

As you can imagine, working with eigenfunctions can make mathematical analysis easy.
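Here is a minimal SymPy check of the eigenfunction idea. It assumes the exponential has the form \(e^{at}\) with \(a > 0\); the positivity assumption just keeps \(1/a\) well defined. The helper `scale_factor` is a hypothetical name for this sketch. It divides the result of an operation by the original function. If the ratio no longer depends on \(t\), the function behaves like an eigenfunction. The sine function is included as a contrast.

```python
import sympy as sp

t = sp.symbols('t')
a = sp.symbols('a', positive=True)  # scaling factor, assumed positive so 1/a is defined


def scale_factor(expr, result):
    """Return result/expr, simplified. If the ratio does not depend on t,
    result is just a constant times expr."""
    return sp.simplify(result / expr)


f_exp = sp.exp(a * t)
deriv_ratio = scale_factor(f_exp, sp.diff(f_exp, t))       # a
integ_ratio = scale_factor(f_exp, sp.integrate(f_exp, t))  # 1/a (SymPy omits the constant C)

f_sin = sp.sin(t)
# The ratio is cos(t)/sin(t) (possibly rewritten by SymPy), which still depends on t
sin_ratio = scale_factor(f_sin, sp.diff(f_sin, t))

print(deriv_ratio, integ_ratio, sin_ratio.has(t))
```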

Submit your feedback

# @title Submit your feedback

content_review(f"{feedback_prefix}_Geometrical_understanding_Interactive_Demo")

Section 2: Analytical & Numerical Differentiation

Video 3: Differentiation

Submit your feedback

# @title Submit your feedback

content_review(f"{feedback_prefix}_Differentiation_Video")

In this section, we will delve into how we actually find the derivative of a function, both analytically and numerically.

When we find the derivative analytically, we obtain the exact formula for the derivative function.

To do this, instead of having to do some fancy math every time, we can often consult an online resource for a list of common derivatives, in this case, our trusty friend Wikipedia.

If I told you to find the derivative of \(f(t) = t^3\), you could consult that site and find in Section 2.1 that if \(f(t) = t^n\), then \(\frac{d(f(t))}{dt} = nt^{n-1}\). So you would be able to tell me that the derivative of \(f(t) = t^3\) is \(\frac{d(f(t))}{dt} = 3t^{2}\).

This list of common derivatives often contains only very simple functions. Luckily, as we’ll see in the next two sections, we can often break the derivative of a complex function down into the derivatives of simpler components.

Section 2.1.1: Product Rule

Sometimes we encounter functions which are the product of two functions that both depend on the variable. How do we take the derivative of such functions? For this we use the Product Rule.

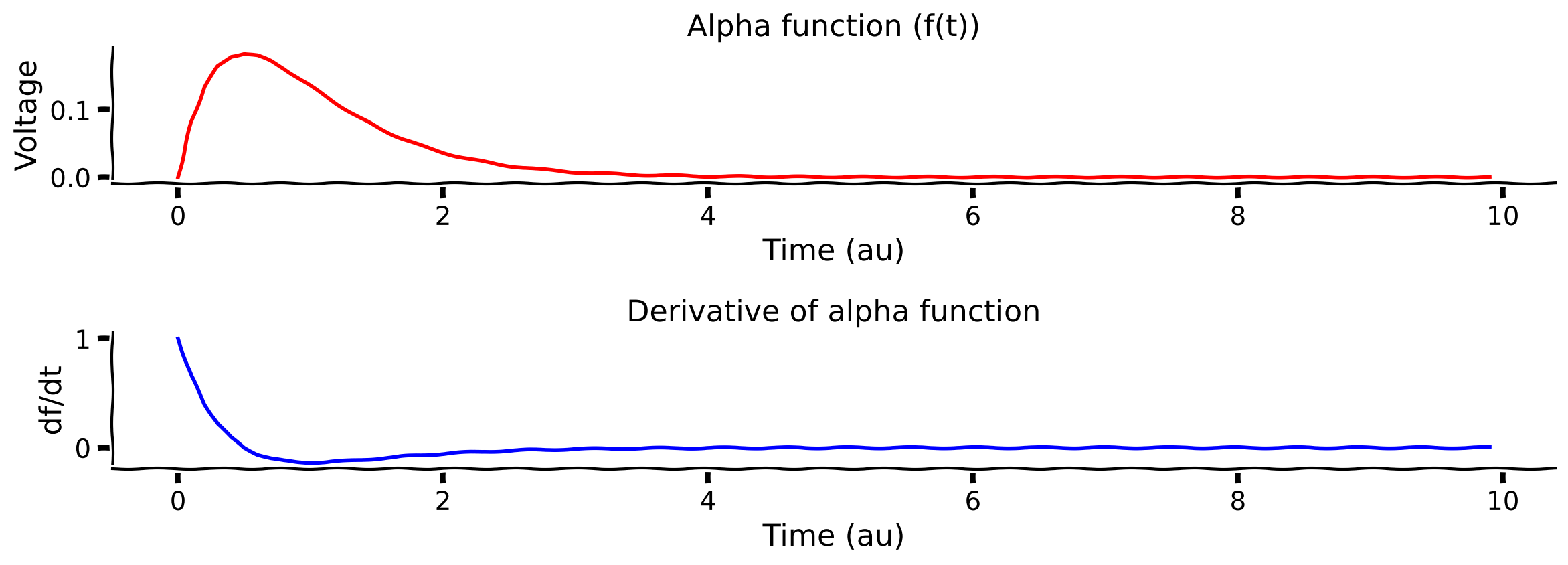
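The product rule can also be checked symbolically. In this sketch, `u` and `v` are generic SymPy functions of \(t\). They are placeholders, not the functions from the exercise below, so SymPy's result is exactly the product rule written in terms of \(u\), \(v\) and their derivatives.

```python
import sympy as sp

t = sp.symbols('t')
# Generic (unspecified) functions of t, used only to display the rule symbolically
u = sp.Function('u')(t)
v = sp.Function('v')(t)

# SymPy expands d(u*v)/dt into terms involving du/dt and dv/dt
product_derivative = sp.diff(u * v, t)
print(product_derivative)

# Compare with the product rule written out by hand: d(uv)/dt = v*du/dt + u*dv/dt
product_rule = sp.diff(u, t) * v + u * sp.diff(v, t)
print(sp.simplify(product_derivative - product_rule))  # 0
```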

Coding Exercise 2.1.1: Derivative of the postsynaptic potential alpha function

Let’s use the product rule to get the derivative of the postsynaptic potential alpha function. As we saw in Video 3, the shape of the postsynaptic potential is given by the so-called alpha function:

\[ f(t) = t \cdot \exp\left(-\frac{t}{\tau}\right) \]

Here \(f(t)\) is a product of \(t\) and \(\text{exp} \left(-\frac{t}{\tau} \right)\). So we can have \(u(t) = t\) and \(v(t) = \text{exp} \left( -\frac{t}{\tau} \right)\) and use the product rule!

We have defined \(u(t)\) and \(v(t)\) in the code below for the variable \(t\), an array of time steps from 0 to 10. Define \(\frac{du}{dt}\) and \(\frac{dv}{dt}\), then compute the full derivative of the alpha function using the product rule. You can always consult Wikipedia to figure out \(\frac{du}{dt}\) and \(\frac{dv}{dt}\)!

########################################################################

## TODO for students

## Complete all ... in code below and remove

raise NotImplementedError("Calculate the derivatives")

########################################################################

# Define time, time constant

t = np.arange(0, 10, .1)

tau = 0.5

# Compute alpha function

f = t * np.exp(-t/tau)

# Define u(t), v(t)

u_t = t

v_t = np.exp(-t/tau)

# Define du/dt, dv/dt

du_dt = ...

dv_dt = ...

# Define full derivative

df_dt = ...

# Visualize

plot_alpha_func(t, f, df_dt)

Example output:

Submit your feedback

# @title Submit your feedback

content_review(f"{feedback_prefix}_Derivative_of_the_postsynaptic_potential_alpha_function_Exercise")

Section 2.1.2: Chain Rule

We often encounter situations in which the variable \(a\) changes with time (\(t\)) and affects another variable \(r\). How can we estimate the derivative of \(r\) with respect to \(a\), i.e., \(\frac{dr}{da} = ?\)

To calculate \(\frac{dr}{da}\) we use the Chain Rule:

\[ \frac{dr}{da} = \frac{dr}{dt} \cdot \frac{dt}{da} = \frac{dr/dt}{da/dt} \]

That is, we calculate the derivatives of both variables with respect to \(t\) and divide the derivative of \(r\) by the derivative of \(a\).

We can also use this formula to simplify taking derivatives of complex functions! We can introduce an intermediate function \(t\), chosen so that the individual derivatives are simple, and then multiply those derivatives, as in the exercise below.
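The following minimal sketch illustrates this strategy with the hypothetical function \(r(a) = \sin(a^2)\), which is deliberately different from the exercise below. Setting the intermediate variable \(t = a^2\) turns it into two easy derivatives. SymPy then confirms that their product matches differentiating \(r(a)\) directly.

```python
import sympy as sp

a, t = sp.symbols('a t')

# Hypothetical example: r(a) = sin(a**2), rewritten with t = a**2 as r = sin(t)
t_of_a = a**2
r_of_t = sp.sin(t)

dr_dt = sp.diff(r_of_t, t)   # cos(t)
dt_da = sp.diff(t_of_a, a)   # 2*a

# Chain rule: dr/da = dr/dt * dt/da, then substitute t = a**2 back in
chain_rule_result = (dr_dt * dt_da).subs(t, t_of_a)

# Compare with differentiating r(a) directly
direct_result = sp.diff(sp.sin(a**2), a)
print(chain_rule_result)                              # 2*a*cos(a**2)
print(sp.simplify(chain_rule_result - direct_result))  # 0
```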

Math Exercise 2.1.2: Chain Rule

Let’s say that:

\[ r(a) = e^{a^4 + a^2 + 1} \]

What is \(\frac{dr}{da}\)? This is a more complex function, so we can’t simply consult a table of common derivatives. Can you use the chain rule to help?

Hint: We didn’t define \(t\), but you could set \(t\) equal to the function in the exponent.

Submit your feedback

# @title Submit your feedback

content_review(f"{feedback_prefix}_Chain_Rule_Math_Exercise")

Section 2.1.3: Derivatives in Python using SymPy

There is a useful Python library for getting the analytical derivatives of functions: SymPy. We actually used this in Interactive Demo 1, under the hood.

See the following cell for an example of setting up a SymPy function and finding the derivative.

# For sympy we first define our symbolic variables

f, t = sp.symbols('f, t')

# Function definition (sigmoid)

f = 1/(1 + sp.exp(-(t-5)))

# Get the derivative

diff_f = sp.diff(f)

# Print the resulting function

print('Derivative of', f, 'is ', diff_f)

Derivative of 1/(exp(5 - t) + 1) is exp(5 - t)/(exp(5 - t) + 1)**2

Section 2.2: Numerical Differentiation

Estimated timing to here from start of tutorial: 30 min

Numerically, the derivative of a function \(f(x)\) at any value \(a\) can be approximated by the finite difference (FD) formula:

\[ FD = \frac{f(a+h) - f(a)}{h} \]

As \(h\rightarrow 0\), the \(FD\) approaches the actual value of the derivative. Let’s check this.

Note: The numerical estimate of the derivative will result in a time series whose length is one short of the original time series.
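Before the interactive demo, here is a short NumPy sketch that runs the same check at a single point, \(a = 1\), for \(f = \sin\). The step sizes are arbitrary choices for illustration. For this smooth function, the finite-difference error shrinks roughly in proportion to \(h\), until \(h\) gets so small that floating-point round-off starts to dominate. The last lines show that the result on a sampled signal is one sample shorter.

```python
import numpy as np

a = 1.0             # point at which the derivative is estimated
exact = np.cos(a)   # analytical derivative of sin(t) at t = a

step_sizes = [0.5, 0.1, 0.01, 0.001]
errors = []
for h in step_sizes:
    fd = (np.sin(a + h) - np.sin(a)) / h   # finite difference formula
    errors.append(abs(fd - exact))
    print(f"h = {h:<6}  FD = {fd:.5f}  error = {errors[-1]:.5f}")

# For a sampled signal, the same formula is np.diff(y) / h,
# which returns one fewer sample than the signal itself
h = 0.1
t = np.linspace(0, 1, 11)   # 11 samples spaced h = 0.1 apart
y = np.sin(t)
dy_dt = np.diff(y) / h
print(len(t), len(dy_dt))   # 11 10
```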

Interactive Demo 2.2: Numerical Differentiation of the Sine Function

Below, we find the numerical derivative of the sine function for different values of \(h\) and compare the result to the analytical solution.

What values of \(h\) result in more accurate numerical derivatives?

# @markdown *Execute this cell to enable the widget.*

def numerical_derivative_demo(h=0.2):
    # Now let's create a sequence of numbers which change according to the sine function
    dt = 0.01
    tx = np.arange(-10, 10, dt)
    sine_fun = np.sin(tx)

    # Symbolic differentiation tells us that the derivative of sin(t) is cos(t)
    cos_fun = np.cos(tx)

    # Numerical derivative using the finite difference formula
    n_tx = np.arange(-10, 10, h)  # create new time axis
    n_sine_fun = np.sin(n_tx)  # calculate the sine function on the new time axis
    sine_diff = (n_sine_fun[1:] - n_sine_fun[0:-1]) / h

    fig = plt.figure()
    ax = plt.subplot(111)
    plt.plot(tx, sine_fun, label='sine function')
    plt.plot(tx, cos_fun, label='analytical derivative of sine')

    with plt.xkcd():
        # Notice that the numerical derivative has one element fewer
        plt.plot(n_tx[0:-1], sine_diff, label='numerical derivative of sine')
        plt.xlim([-10, 10])
        plt.xlabel('Time (au)')
        plt.ylabel('f(x) or df(x)/dt')
        ax.legend(loc='upper center', bbox_to_anchor=(0.5, 1.05),
                  ncol=3, fancybox=True)
        plt.show()


_ = widgets.interact(numerical_derivative_demo, h=(0.01, 0.5, .02))

Submit your feedback

# @title Submit your feedback

content_review(f"{feedback_prefix}_Numerical_Differentiation_of_the_Sine_Function_Interactive_Demo")

Section 2.3: Transfer Function and Gain of a Neuron

Estimated timing to here from start of tutorial: 34 min

When we inject a constant current (DC) into a neuron, its firing rate changes as a function of the strength of the injected current. This relationship is called the input-output transfer function of the neuron, or simply its transfer function or I/O curve. For most neurons, it can be approximated by a sigmoid function, e.g.:

\[ rate(I) = \frac{1}{1+\exp(-a(I-\theta))} - \frac{1}{1+\exp(a\theta)} + \eta \]

where \(I\) is the injected current, \(rate\) is the neuron firing rate, \(a\) and \(\theta\) are parameters that set the shape of the curve, and \(\eta\) is noise (Gaussian noise with zero mean and standard deviation \(\sigma\)).

You will visit this equation in a different context in Week 3.

The slope of a neuron input-output transfer function, i.e., \(\frac{d(r(I))}{dI}\), is called the gain of the neuron, as it tells us how the neuron output will change if the input is changed. In other words, the slope of the transfer function tells us in which range of inputs the neuron output is most sensitive to changes in its input.
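As a cross-check that is not part of the original demo, the gain can also be obtained analytically with SymPy and compared with the finite-difference estimate. This sketch uses the same sigmoid that `compute_rate_and_gain` evaluates in the cell below. It drops the noise term \(\eta\) and assumes the illustrative values \(a = 1.2\) and \(\theta = 5\).

```python
import numpy as np
import sympy as sp

# Symbolic sigmoid transfer function, with the noise term eta left out
I_s, a_s, theta_s = sp.symbols('I a theta')
rate_expr = 1 / (1 + sp.exp(-a_s * (I_s - theta_s))) - 1 / (1 + sp.exp(a_s * theta_s))
gain_expr = sp.diff(rate_expr, I_s)  # analytical gain d(rate)/dI

# Hypothetical parameter values, chosen only for illustration
params = {a_s: 1.2, theta_s: 5.0}
dI = 0.01
I_vals = np.arange(0, 10, dI)

rate_fn = sp.lambdify(I_s, rate_expr.subs(params), 'numpy')
gain_fn = sp.lambdify(I_s, gain_expr.subs(params), 'numpy')

rate = rate_fn(I_vals)
gain_numerical = np.diff(rate) / dI      # finite-difference gain (one sample shorter)
gain_analytical = gain_fn(I_vals[:-1])

max_error = np.max(np.abs(gain_numerical - gain_analytical))
I_steepest = I_vals[np.argmax(gain_numerical)]
print(max_error)    # small; shrinks as dI shrinks
print(I_steepest)   # input current where the transfer function is steepest
```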

Interactive Demo 2.3: Calculating the Transfer Function and Gain of a Neuron

In the following demo, you can estimate the gain of the neuron transfer function above using numerical differentiation. We use the spacing between successive current values (current_timestep in the code) as \(h\). See the cell below for a function that computes the rate via the formula above and then the gain using numerical differentiation. In the following cell, you can play with the parameters \(a\) and \(\theta\) to change the shape of the transfer function (and see the resulting gain function). You can also set \(I_{mean}\) to see how the slope is computed for that value of \(I\). In the left plot, the red vertical lines are the two values of the current being used to calculate the slope, while the blue lines point to the corresponding output firing rates.

Change the parameters of the neuron transfer function (i.e., \(a\) and \(\theta\)) and see if you can predict the value of \(I\) for which the neuron has a maximal slope and which parameter determines the peak value of the gain.

Ensure you understand how the right plot relates to the left!

How does \(\theta\) affect the transfer function and gain?

How does \(a\) affect the transfer function and gain?

def compute_rate_and_gain(I, a, theta, current_timestep):
    """ Compute rate and gain of neuron based on parameters

    Args:
        I (ndarray): different possible values of the current
        a (scalar): parameter of the transfer function
        theta (scalar): parameter of the transfer function
        current_timestep (scalar): step size between successive values of I (used as h)

    Returns:
        (ndarray, ndarray): rate for each possible value of I, and gain
        (one element shorter, since it is a numerical derivative)
    """

    # Compute rate
    rate = (1+np.exp(-a*(I-theta)))**-1 - (1+np.exp(a*theta))**-1

    # Compute gain using a numerical derivative
    gain = (rate[1:] - rate[0:-1])/current_timestep

    return rate, gain

# @markdown Execute this cell to enable the widget

def plot_rate_and_gain(a, theta, I_mean):
    current_timestep = 0.1

    # Compute I
    I = np.arange(0, 8, current_timestep)
    rate, gain = compute_rate_and_gain(I, a, theta, current_timestep)

    I_1 = I_mean - current_timestep/2
    rate_1 = (1+np.exp(-a*(I_1-theta)))**-1 - (1+np.exp(a*theta))**-1
    I_2 = I_mean + current_timestep/2
    rate_2 = (1+np.exp(-a*(I_2-theta)))**-1 - (1+np.exp(a*theta))**-1

    input_range = I_2-I_1
    output_range = rate_2 - rate_1

    # Visualize rate and gain
    plt.subplot(1, 2, 1)
    plt.plot(I, rate)
    plt.plot([I_1, I_1], [0, rate_1], color='r')
    plt.plot([0, I_1], [rate_1, rate_1], color='b')
    plt.plot([I_2, I_2], [0, rate_2], color='r')
    plt.plot([0, I_2], [rate_2, rate_2], color='b')
    plt.xlim([0, 8])
    low, high = plt.ylim()
    plt.ylim([0, high])
    plt.xlabel('Injected current (au)')
    plt.ylabel('Output firing rate (normalized)')
    plt.title('Transfer function')
    plt.text(2, 1.3, 'Output-Input Ratio =' + str(np.round(1000*output_range/input_range)/1000), style='italic',
             bbox={'facecolor': 'red', 'alpha': 0.5, 'pad': 10})

    plt.subplot(1, 2, 2)
    plt.plot(I[0:-1], gain)
    plt.plot([I_mean, I_mean], [0, 0.6], color='r')
    plt.xlabel('Injected current (au)')
    plt.ylabel('Gain')
    plt.title('Gain')
    plt.xlim([0, 8])
    low, high = plt.ylim()
    plt.ylim([0, high])


_ = widgets.interact(plot_rate_and_gain, a=(0.5, 2.0, .02), theta=(1.2, 4.0, 0.1), I_mean=(0.5, 8.0, 0.1))

Submit your feedback

# @title Submit your feedback

content_review(f"{feedback_prefix}_Calculating_the_transfer_function_and_gain_of_a_neuron_Interactive_Demo")

Section 3: Functions of Multiple Variables

Estimated timing to here from start of tutorial: 44 min

Video 4: Functions of multiple variables

Submit your feedback

# @title Submit your feedback

content_review(f"{feedback_prefix}_Functions_of_multiple_variables_Video")

This video covers what partial derivatives are.

In the previous section, you looked at functions of a single variable, \(t\) or \(x\). In most cases, however, we encounter functions of multiple variables. For example, in the brain, the firing rate of a neuron is a function of both excitatory and inhibitory input rates. In the following, we will look into how to calculate derivatives of such functions.

When we take the derivative of a multivariable function with respect to one of the variables, it is called a partial derivative. For example, if we have a function:

\[ f(x,y) = x^2 + 2xy + y^2 \]

then we can define the partial derivatives as:

\[ \frac{\partial f(x,y)}{\partial x} = 2x + 2y + 0 \]

\[ \frac{\partial f(x,y)}{\partial y} = 0 + 2x + 2y \]

In the above, the derivative of the last term (\(y^2\)) with respect to \(x\) is zero because it does not change with respect to \(x\). Similarly, the derivative of \(x^2\) with respect to \(y\) is also zero.

Just as with the derivatives we saw earlier, you can get partial derivatives through either an analytical method (finding an exact equation) or a numerical method (approximating).
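For the numerical route, one option is `np.gradient`. It estimates the derivative along each axis of a grid, using central differences in the interior and one-sided differences at the edges. This sketch assumes the function \(x^2 + 2xy + y^2\), which is the default in the interactive demo, on an arbitrary grid spacing of 0.05. Note that with `np.meshgrid`'s default indexing, axis 0 runs along \(y\) and axis 1 along \(x\).

```python
import numpy as np

step = 0.05
x = np.arange(-5, 5, step)
y = np.arange(-5, 5, step)
X, Y = np.meshgrid(x, y)  # default 'xy' indexing: axis 0 follows y, axis 1 follows x

F = X**2 + 2 * X * Y + Y**2

# np.gradient returns one array per axis: first d/d(axis 0) = d/dy, then d/d(axis 1) = d/dx
dF_dy, dF_dx = np.gradient(F, step, step)

# Analytical partial derivatives for comparison
dF_dx_exact = 2 * X + 2 * Y
dF_dy_exact = 2 * X + 2 * Y

# Errors are largest at the grid edges, where one-sided differences are used
print(np.max(np.abs(dF_dx - dF_dx_exact)), np.max(np.abs(dF_dy - dF_dy_exact)))
```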

Interactive Demo 3: Visualize partial derivatives

In the demo below, you can input any function of \(x\) and \(y\) and then visualize both the function and partial derivatives.

We visualize the 2-dimensional function as a surface plot in which the function values are rendered as color: yellow represents a high value, and blue represents a low value. The height of the surface also shows the numerical value of the function. A complete description of 2D surface plots and why we need them can be found in Bonus Section 1.1. The first plot shows our function, and the next two plots show the partial derivative surfaces with respect to the \(x\) and \(y\) variables.

Ensure you understand how the plots relate to each other. If not, review the material above.

Can you come up with a function where the partial derivative with respect to x will be a linear plane, and the derivative with respect to y will be more curvy?

What happens to the partial derivatives if no terms involve multiplying \(x\) and \(y\) together?

# @markdown Execute this widget to enable the demo

# Let's use sympy to calculate partial derivatives of a function of 2 variables
@interact(f2d_string='x**2 + 2*x*y + y**2')
def plot_partial_derivs(f2d_string):
    f, x, y = sp.symbols('f, x, y')
    f2d = eval(f2d_string)
    f2d_dx = sp.diff(f2d, x)
    f2d_dy = sp.diff(f2d, y)
    print('Partial derivative of ', f2d, 'with respect to x is', f2d_dx)
    print('Partial derivative of ', f2d, 'with respect to y is', f2d_dy)
    p1 = sp.plotting.plot3d(f2d, (x, -5, 5), (y, -5, 5), show=True, xlabel='x', ylabel='y', zlabel='f(x,y)', title='Our function')
    p2 = sp.plotting.plot3d(f2d_dx, (x, -5, 5), (y, -5, 5), show=True, xlabel='x', ylabel='y', zlabel='df(x,y)/dx', title='Derivative w.r.t. x')
    p3 = sp.plotting.plot3d(f2d_dy, (x, -5, 5), (y, -5, 5), show=True, xlabel='x', ylabel='y', zlabel='df(x,y)/dy', title='Derivative w.r.t. y')

To see an application of the numerical calculation of partial derivatives to understand a neuron driven by excitatory and inhibitory inputs, see Bonus Section 1!

We will use partial derivatives several times in the course. For example, partial derivatives are used to calculate the Jacobian of a system of differential equations. The Jacobian is used to determine the dynamics and stability of a system. This will be introduced in the second week while studying the dynamics of excitatory and inhibitory population interactions.
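As a preview, SymPy can build a Jacobian directly from partial derivatives with `Matrix.jacobian`. The two-variable system below is a hypothetical example chosen only to show the mechanics. Each entry of the resulting matrix is one partial derivative.

```python
import sympy as sp

x, y = sp.symbols('x y')

# Hypothetical 2-variable system: dx/dt = f1(x, y), dy/dt = f2(x, y)
f1 = -x + x*y
f2 = -2*y + x**2

# Entry (i, j) of the Jacobian is the partial derivative of f_i with respect to variable j
J = sp.Matrix([f1, f2]).jacobian([x, y])
print(J)  # Matrix([[y - 1, x], [2*x, -2]])

# Evaluate the Jacobian at the fixed point (0, 0), where f1 = f2 = 0
print(J.subs({x: 0, y: 0}))
```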

Submit your feedback

# @title Submit your feedback

content_review(f"{feedback_prefix}_Visualize_partial_derivatives_Interactive_Demo")

Section 4: Numerical Integration

Video 5: Numerical Integration

Submit your feedback

# @title Submit your feedback

content_review(f"{feedback_prefix}_Numerical_Integration_Video")

This video covers numerical integration and specifically Riemann sums.

Geometrically, integration is the area under the curve. This interpretation gives two formal ways to calculate the integral of a function numerically.

Riemann sum: If we wish to integrate a function \(f(t)\) with respect to \(t\), we first divide the integration range into \(n\) intervals of size \(dt = b - a\), where \(a\) is the start and \(b\) the end of each interval. Each interval then gives a rectangle with height \(f(a)\) and width \(dt\). By summing the areas of all the rectangles, we can approximate the area under the curve. As \(dt\) approaches zero, our estimate of the integral approaches the analytical value. Essentially, the Riemann sum cuts the region under the curve into vertical stripes, calculates the area of each stripe, and sums them up.
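The recipe above fits in a few lines of NumPy. This is a minimal sketch. `left_riemann_sum` is a hypothetical helper, and the test integral \(\int_0^\pi \sin(t)\,dt = 2\) was picked because its exact value is easy to check.

```python
import numpy as np


def left_riemann_sum(f, t_start, t_end, n):
    """Approximate the integral of f from t_start to t_end with n left-endpoint rectangles."""
    dt = (t_end - t_start) / n
    left_edges = t_start + dt * np.arange(n)  # start of each interval
    return np.sum(f(left_edges) * dt)         # sum of (height * width)


# Example: the integral of sin(t) from 0 to pi, whose exact value is 2
errors = []
for n in [4, 16, 64, 256]:
    approx = left_riemann_sum(np.sin, 0, np.pi, n)
    errors.append(abs(approx - 2))
    print(n, approx, errors[-1])
```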

Section 4.1: Demonstration of the Riemann Sum

Estimated timing to here from start of tutorial: 60 min

Interactive Demo 4.1: Riemann Sum vs. Analytical Integral with changing step size

Below, we will compare numerical integration using the Riemann Sum with the analytical solution. You can change the interval size \(dt\) using the slider.

What values of dt result in the best numerical integration?

What is the downside of choosing that value of \(dt\)?

With large \(dt\), why are we underestimating the integral (as opposed to overestimating it)?

# @markdown Run this cell to enable the widget!

def riemann_sum_demo(dt=0.5):
    step_size = 0.1
    min_val = 0.
    max_val = 10.
    tx = np.arange(min_val, max_val, step_size)

    # Our function
    ftn = tx**2 - tx + 1
    # And its analytical integral (as calculated with sympy)
    int_ftn = tx**3/3 - tx**2/2 + tx

    # Numerical integration of f(t) using Riemann Sum
    n = int((max_val-min_val)/dt)
    r_tx = np.zeros(n)
    fun_value = np.zeros(n)
    for ii in range(n):
        a = min_val+ii*dt
        fun_value[ii] = a**2 - a + 1
        r_tx[ii] = a

    # Riemann sum is just the cumulative sum of fun_value multiplied by the interval width dt
    r_sum = np.cumsum(fun_value)*dt

    with plt.xkcd():
        plt.figure(figsize=(20, 5))
        ax = plt.subplot(1, 2, 1)
        plt.plot(tx, ftn, label='Function')

        for ii in range(n):
            plt.plot([r_tx[ii], r_tx[ii], r_tx[ii]+dt, r_tx[ii]+dt], [0, fun_value[ii], fun_value[ii], 0], color='r')

        plt.xlabel('Time (au)')
        plt.ylabel('f(t)')
        plt.title('f(t)')
        plt.grid()

        plt.subplot(1, 2, 2)
        plt.plot(tx, int_ftn, label='Analytical')
        plt.plot(r_tx+dt, r_sum, color='r', label='Riemann Sum')
        plt.xlabel('Time (au)')
        plt.ylabel('int(f(t))')
        plt.title('Integral of f(t)')
        plt.grid()
        plt.legend()
        plt.show()


_ = widgets.interact(riemann_sum_demo, dt=(0.1, 1., .02))

There are other methods of numerical integration, such as the Lebesgue integral and Runge-Kutta methods. In the Lebesgue integral, we divide the area under the curve into horizontal stripes. That is, instead of the independent variable, the range of the function \(f(t)\) is divided into small intervals. In any case, the Riemann sum is the basis of Euler’s method for solving ordinary differential equations, which you will use in a later tutorial today.
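The following minimal sketch makes the connection to Euler's method concrete, using the hypothetical equation \(dx/dt = -x/\tau\), whose exact solution is \(e^{-t/\tau}\). Each update adds one Riemann rectangle: the slope at the start of the step times the step width. The parameter values are illustrative assumptions.

```python
import numpy as np

# Hypothetical ODE: dx/dt = -x / tau with x(0) = 1; the exact solution is exp(-t / tau)
tau = 1.0
t_end = 1.0
n_steps = 1000
dt = t_end / n_steps

x = np.zeros(n_steps + 1)
x[0] = 1.0
for k in range(n_steps):
    dxdt = -x[k] / tau           # height of the rectangle: the slope at the start of the step
    x[k + 1] = x[k] + dxdt * dt  # add the rectangle's area (height * width)

print(x[-1], np.exp(-t_end / tau))  # Euler estimate vs. exact value at t_end
```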

Submit your feedback¶

# @title Submit your feedback

content_review(f"{feedback_prefix}_Riemann_Sum_vs_Analytical_Integral_with_changing_step_size_Interactive_Demo")

Section 4.2: Neural Applications of Numerical Integration¶

Estimated timing to here from start of tutorial: 68 min

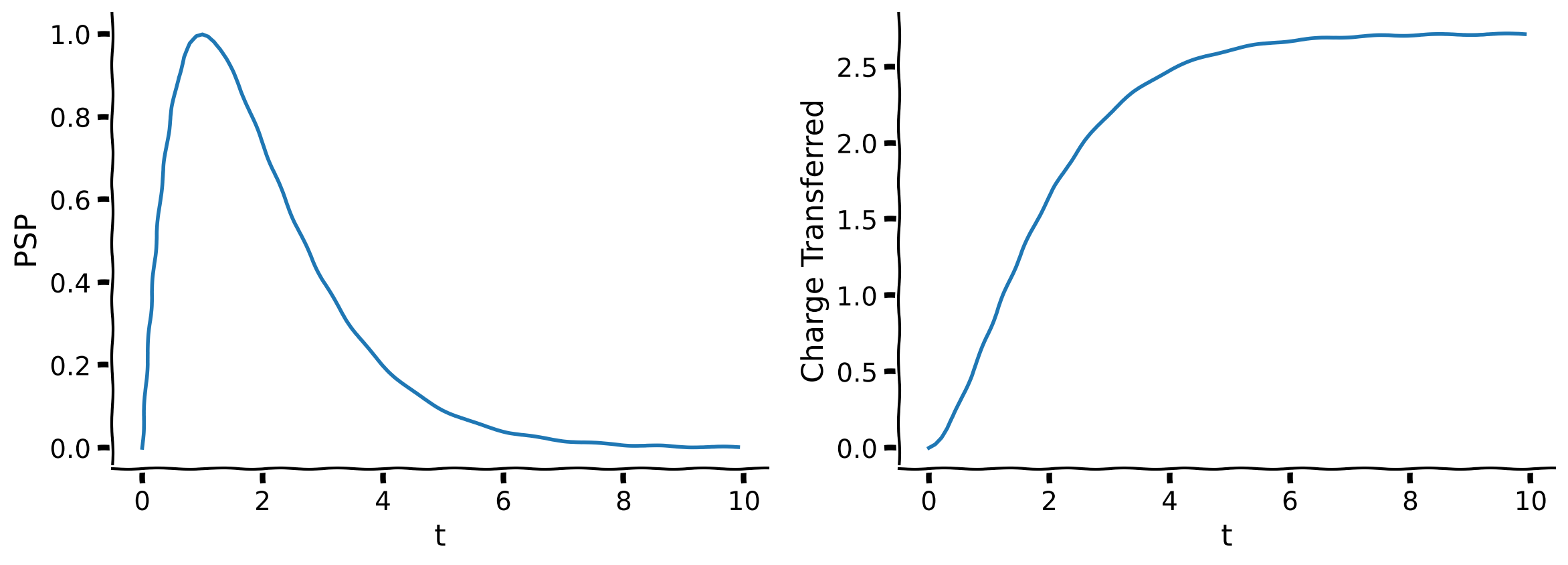

Coding Exercise 4.2: Calculating Charge Transfer with Excitatory Input¶

An incoming spike elicits a change in the post-synaptic membrane potential (PSP), which can be captured by the following function:

\[ PSP(t) = J \cdot t \cdot \exp\left(-\frac{t - t_{sp}}{\tau_s}\right) \]

where \(J\) is the synaptic amplitude, \(t_{sp}\) is the spike time and \(\tau_s\) is the synaptic time constant.

Estimate the total charge transferred to the postsynaptic neuron during a PSP with amplitude \(J=1.0\), \(\tau_s = 1.0\) and \(t_{sp} = 1\) (that is, the spike occurred at \(1\) ms). The total charge is the integral of the PSP function.

########################################################################

## TODO for students

## Complete all ... in code below and remove

raise NotImplementedError("Calculate the charge transfer")

########################################################################

# Set up parameters

J = 1

tau_s = 1

t_sp = 1

dt = .1

t = np.arange(0, 10, dt)

# Code PSP formula

PSP = ...

# Compute numerical integral

# We already have PSP at every time step (height of rectangles). We need to
# multiply by the width of the rectangles (dt) to get their areas

rectangle_areas = ...

# Cumulatively sum rectangles (hint: use np.cumsum)

numerical_integral = ...

# Visualize

plot_charge_transfer(t, PSP, numerical_integral)

Example output:

You can see from the figure that the total charge transferred is a little over 2.5.

Submit your feedback

# @title Submit your feedback

content_review(f"{feedback_prefix}_Calculating_Charge_Transfer_with_Excitatory_Input_exercise")

Section 5: Differentiation and Integration as Filtering Operations

Estimated timing to here from start of tutorial: 75 min

Video 6: Filtering Operations

Submit your feedback

# @title Submit your feedback

content_review(f"{feedback_prefix}_Filtering_Operations_Video")

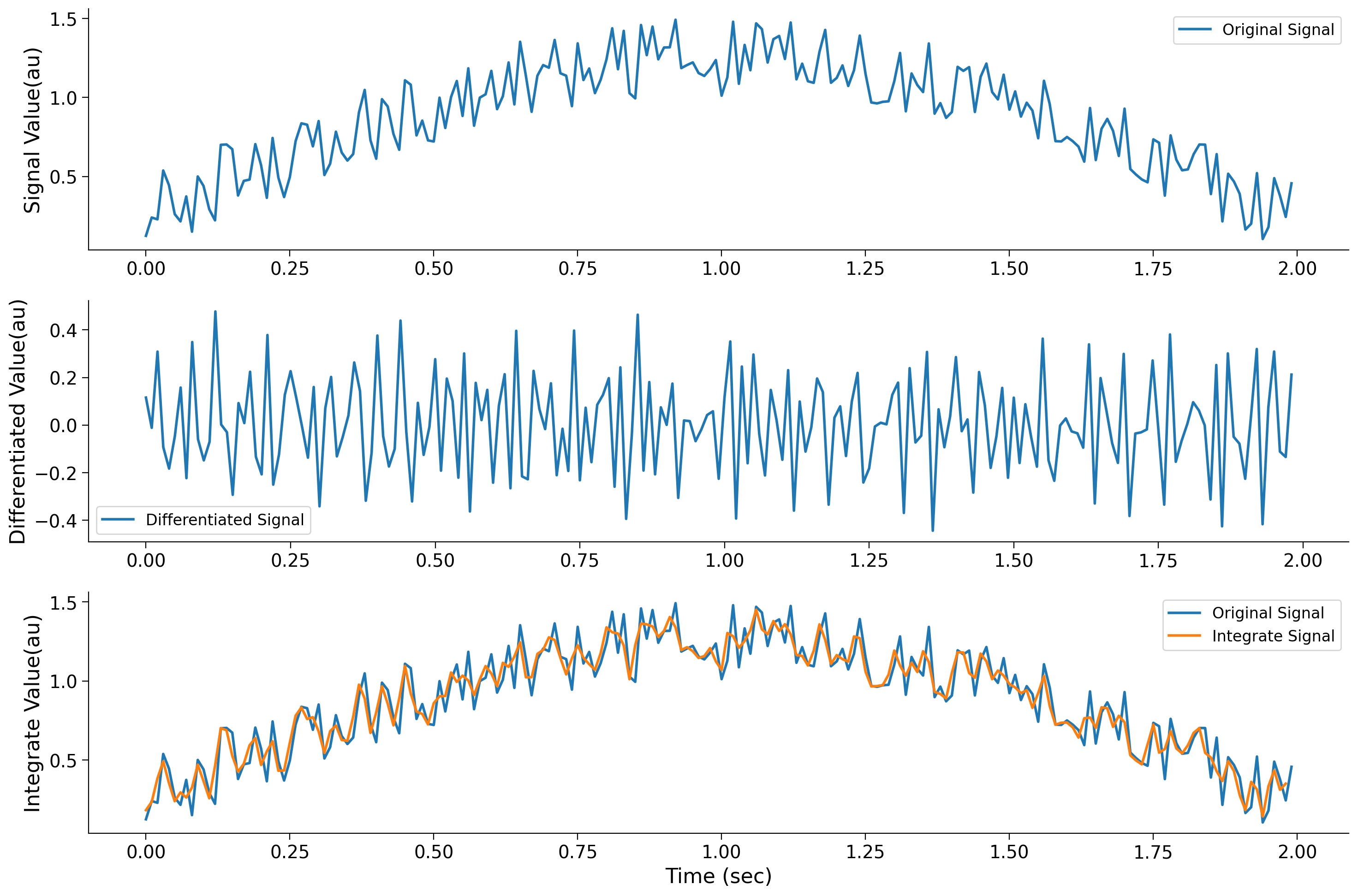

This video covers a different interpretation of differentiation and integration: viewing them as filtering operations.

Click here for text recap of video

In the above, we used the notions that geometrically integration is the area under the curve and differentiation is the slope of the curve. There is another interpretation of these two operations.As we calculate the derivative of a function, we take the difference of adjacent values of the function. This results in the removal of common part between the two values. As a consequence, we end up removing the unchanging part of the signal. If we now think in terms of frequencies, differentiation removes low frequencies, or slow changes. That is, differentiation acts as a high pass filter.

Integration does the opposite because in the estimation of an integral we keep adding adjacent values of the signal. So, again thinking in terms of frequencies, integration is akin to the removal of high frequencies or fast changes (low-pass filter). The shock absorbers in your bike are an example of integrators.

We can see this behavior in the demo below. Here we will not work with functions but with signals. Mathematically, functions and signals are the same thing; the only difference is that, in most cases, our signals are measurements made over time.

Execute this cell to see visualization

# @markdown Execute this cell to see visualization

h = 0.01

tx = np.arange(0,2,h)

noise_signal = np.random.uniform(0, 1, (len(tx)))*0.5

x1 = np.sin(0.5*np.pi*tx) + noise_signal # sin(2*pi*f*t) with f = 0.25 Hz, i.e. half a cycle over these 2 s

# The random noise added to x1 contributes the high frequencies

# Take the derivative equivalent of the signal i.e. subtract the adjacent values

x1_diff = (x1[1:] - x1[:-1])

# Take the integration equivalent of the signal i.e. sum the adjacent values. And divide by 2 (take average essentially)

x1_integrate = (x1[1:] + x1[:-1])/2

# Plotting code

plt.figure(figsize=(15,10))

plt.subplot(3,1,1)

plt.plot(tx,x1,label='Original Signal')

#plt.xlabel('Time (sec)')

plt.ylabel('Signal value (au)')

plt.legend()

plt.subplot(3,1,2)

plt.plot(tx[0:-1],x1_diff,label='Differentiated Signal')

# plt.xlabel('Time (sec)')

plt.ylabel('Differentiated value (au)')

plt.legend()

plt.subplot(3,1,3)

plt.plot(tx,x1,label='Original Signal')

plt.plot(tx[0:-1],x1_integrate,label='Integrated Signal')

plt.xlabel('Time (sec)')

plt.ylabel('Integrated value (au)')

plt.legend()


Notice how the differentiation operation amplifies the fast changes contributed by the noise. By contrast, the integration operation suppresses the fast-changing noise. Applying the same averaging of adjacent samples again, this time to the orange trace, would smooth the signal further. Such sums and differences form the basis of digital filters.
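To see the further smoothing, here is a minimal sketch that applies the two-point average repeatedly to noise of the kind used in the demo (uniform on [0, 0.5]). The fixed seed, the number of samples, and the number of passes are arbitrary choices. The spread of the noise, measured by its standard deviation, shrinks with every pass, and each pass shortens the signal by one sample.

```python
import numpy as np

rng = np.random.default_rng(0)              # fixed seed for reproducibility
noise = rng.uniform(0, 1, 2000) * 0.5       # same kind of noise as in the demo

def average_adjacent(x):
    """Average each pair of adjacent samples (result is one sample shorter)."""
    return (x[1:] + x[:-1]) / 2

smoothed = noise
stds = [np.std(smoothed)]
for _ in range(3):                          # three passes of the same filter
    smoothed = average_adjacent(smoothed)
    stds.append(np.std(smoothed))

print([round(float(s), 4) for s in stds])
```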

Summary¶

Estimated timing of tutorial: 80 minutes

Geometrically, integration is the area under the curve, and differentiation is the slope of the function

The concepts of slope and area can be easily extended to higher dimensions. We saw this when we took the derivative of a 2-dimensional transfer function of a neuron

Numerical estimates of both derivatives and integrals require us to choose a step size \(h\). The smaller \(h\) is, the better the estimate, but smaller values of \(h\) also require more computation, so there is always a tradeoff

Partial derivatives are estimates of the slope along one of the many dimensions of the function. Combining the slopes along the different dimensions as a vector sum gives the direction of steepest slope (the gradient)

Because the derivative of a function is zero at a local peak or trough, derivatives are used to solve optimization problems

When applied to signals, integration is equivalent to smoothing them (i.e., removing fast changes)

Differentiation operations remove slow changes and enhance the high-frequency content of a signal
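The step-size tradeoff in the summary can be made concrete with a minimal sketch: a forward-difference estimate of the derivative of sin(x) at x = 1, whose exact value is cos(1). The test function, the list of step sizes, and the 10-unit range used to count points are assumptions for illustration. The error shrinks roughly in proportion to \(h\), while covering a fixed range with step \(h\) takes proportionally more points.

```python
import numpy as np

x0 = 1.0
exact = np.cos(x0)                          # exact derivative of sin at x0

step_sizes = [1e-1, 1e-2, 1e-3, 1e-4]
errors = []
for h in step_sizes:
    estimate = (np.sin(x0 + h) - np.sin(x0)) / h   # forward difference
    errors.append(abs(estimate - exact))

for h, err in zip(step_sizes, errors):
    n_points = int(round(10 / h))           # points needed to cover a 10-unit range
    print(f"h = {h:g}: error = {err:.1e}, points for a 10-unit range = {n_points}")
```

For derivatives the improvement does not continue indefinitely: in double precision, forward differences with \(h\) much below about \(10^{-8}\) start to lose accuracy to floating-point round-off.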

Bonus Section 1: Numerical calculation of partial derivatives¶

Bonus Section 1.1: Understanding 2D plots¶

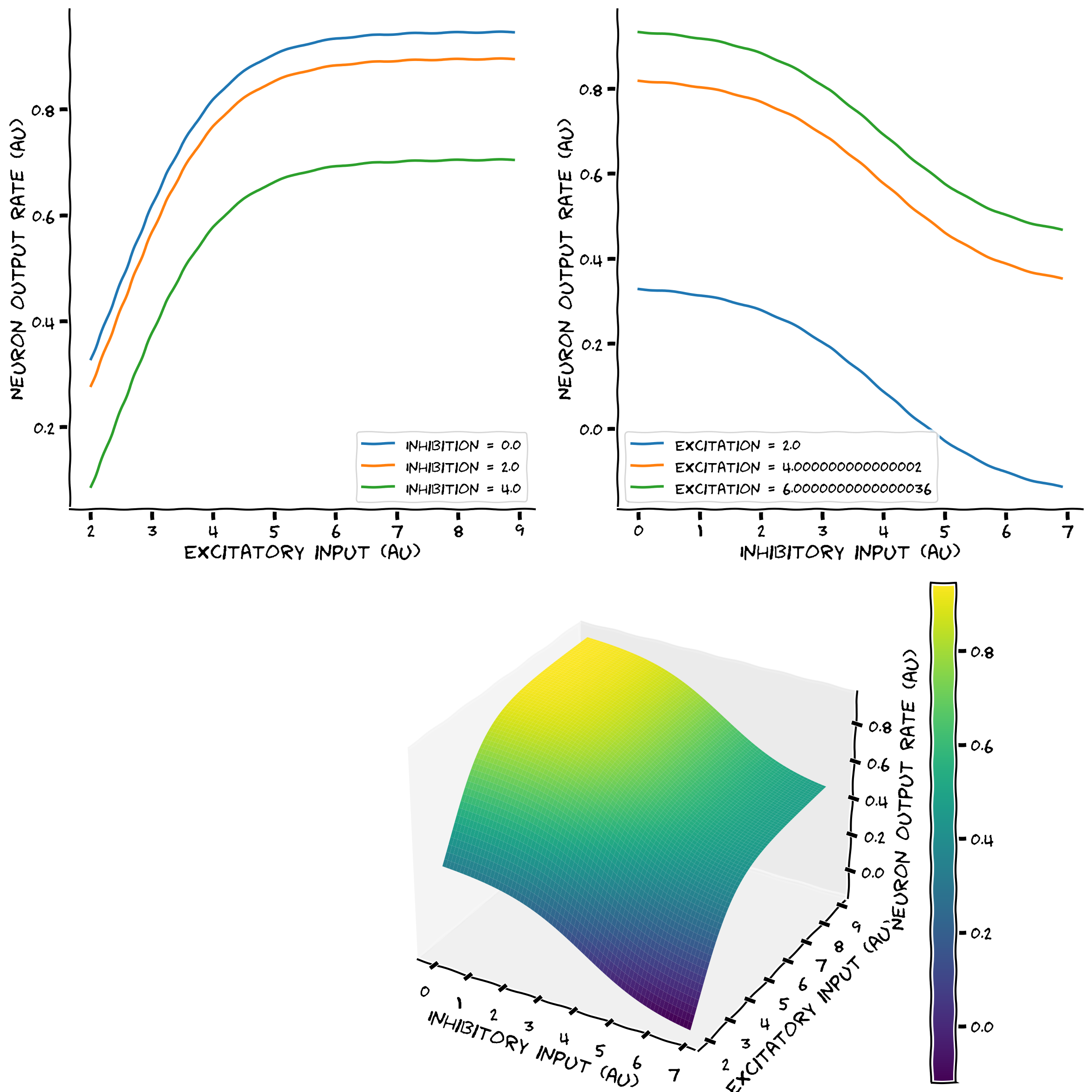

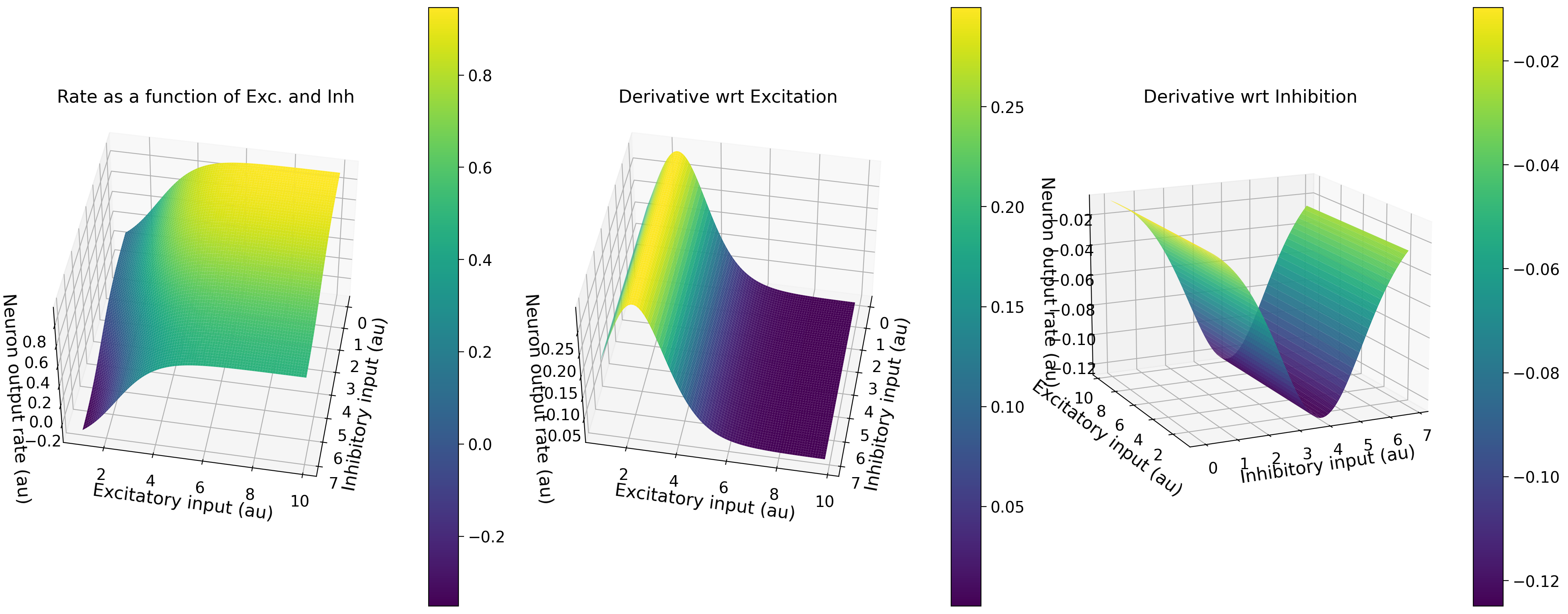

Let’s take the example of a neuron driven by excitatory and inhibitory inputs. Because this is for illustrative purposes, we will not go into the details of the numerical range of the input and output variables.

In the function below, we assume that the firing rate of a neuron increases monotonically with an increase in excitation and decreases monotonically with an increase in inhibition. The inhibition is modeled as a subtraction. As with the 1-dimensional transfer function, we approximate the transfer function with a sigmoid function.

We can use the same numerical differentiation as before to evaluate the partial derivatives, but now we apply it to each row and column separately.
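Applying the difference to each row and column separately is what `np.diff` does when given an `axis`. The minimal sketch below uses a toy surface \(f(x, y) = x^2 + 3y\), an assumption chosen because its partial derivatives (\(2x\) and \(3\)) are known. It gives the same numbers as looping over the columns one at a time, as the bonus code in Section 1.2 does.

```python
import numpy as np

step_size = 0.1
x = np.arange(0, 2, step_size)              # first input (rows of the grid)
y = np.arange(0, 3, step_size)              # second input (columns of the grid)
X, Y = np.meshgrid(x, y, indexing='ij')     # X[i, j] = x[i], Y[i, j] = y[j]
f = X**2 + 3 * Y                            # toy surface with known partials

# Partial derivative along x: difference down each column (one row fewer).
df_dx = np.diff(f, axis=0) / step_size
# Partial derivative along y: difference along each row (one column fewer).
df_dy = np.diff(f, axis=1) / step_size

# Same result as the explicit column-by-column loop.
df_dx_loop = np.zeros((len(x) - 1, len(y)))
for j in range(len(y)):
    df_dx_loop[:, j] = (f[1:, j] - f[:-1, j]) / step_size

# A forward difference of x**2 equals 2 * (x + step_size / 2),
# i.e. the true slope at the midpoint of each interval.
x_mid = x[:-1] + step_size / 2
print(np.allclose(df_dx, 2 * x_mid[:, None]), np.allclose(df_dy, 3.0))
```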

Execute this cell to visualize the neuron firing rate surface

# @markdown Execute this cell to visualize the neuron firing rate surface

def sigmoid_function(x,a,theta):

    '''

    Population activation function.

    Expects:

    x : the population input

    a : the gain of the function

    theta : the threshold of the function

    Returns:

    the population activation response F(x) for input x

    '''

    # Shifted logistic function, offset so that F(0) = 0

    f = (1+np.exp(-a*(x-theta)))**-1 - (1+np.exp(a*theta))**-1

    return f

# Neuron Transfer function

step_size = 0.1

exc_input = np.arange(2,9,step_size)

inh_input = np.arange(0,7,step_size)

exc_a = 1.2

exc_theta = 2.4

inh_a = 1.

inh_theta = 4.

rate = np.zeros((len(exc_input),len(inh_input)))

for ii in range(len(exc_input)):

    for jj in range(len(inh_input)):

        rate[ii,jj] = sigmoid_function(exc_input[ii],exc_a,exc_theta) - sigmoid_function(inh_input[jj],inh_a,inh_theta)*0.5

with plt.xkcd():

    X, Y = np.meshgrid(exc_input, inh_input)

    fig = plt.figure(figsize=(12,12))

    ax1 = fig.add_subplot(2,2,1)

    lg_txt = 'Inhibition = ' + str(inh_input[0])

    ax1.plot(exc_input,rate[:,0],label=lg_txt)

    lg_txt = 'Inhibition = ' + str(inh_input[20])

    ax1.plot(exc_input,rate[:,20],label=lg_txt)

    lg_txt = 'Inhibition = ' + str(inh_input[40])

    ax1.plot(exc_input,rate[:,40],label=lg_txt)

    ax1.legend()

    ax1.set_xlabel('Excitatory input (au)')

    ax1.set_ylabel('Neuron output rate (au)');

    ax2 = fig.add_subplot(2,2,2)

    lg_txt = 'Excitation = ' + str(exc_input[0])

    ax2.plot(inh_input,rate[0,:],label=lg_txt)

    lg_txt = 'Excitation = ' + str(exc_input[20])

    ax2.plot(inh_input,rate[20,:],label=lg_txt)

    lg_txt = 'Excitation = ' + str(exc_input[40])

    ax2.plot(inh_input,rate[40,:],label=lg_txt)

    ax2.legend()

    ax2.set_xlabel('Inhibitory input (au)')

    ax2.set_ylabel('Neuron output rate (au)');

    ax3 = fig.add_subplot(2, 1, 2, projection='3d')

    surf = ax3.plot_surface(Y.T, X.T, rate, rstride=1, cstride=1,
                            cmap='viridis', edgecolor='none')

    ax3.set_xlabel('Inhibitory input (au)')

    ax3.set_ylabel('Excitatory input (au)')

    ax3.set_zlabel('Neuron output rate (au)');

    fig.colorbar(surf)

The Top-Left plot shows how the neuron output rate increases as a function of excitatory input (e.g., the blue trace). As expected, increasing inhibition decreases the neuron output and shifts the curve downwards. Because the shift is the same at every excitatory input, the effect of inhibition is subtractive: the amount subtracted does not depend on the neuron output.
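The claim that inhibition acts as a pure subtraction can be checked directly. This minimal sketch re-uses `sigmoid_function` and the parameter values from the cell above, and compares the curves for inhibition 0 and 4 (two of the plotted levels); the helper `rate` is a name introduced here for illustration. Their difference is the same at every excitatory input and equals half the inhibitory sigmoid evaluated at 4.

```python
import numpy as np

def sigmoid_function(x, a, theta):
    """Shifted sigmoid from the cell above (equals 0 at x = 0)."""
    return (1 + np.exp(-a * (x - theta)))**-1 - (1 + np.exp(a * theta))**-1

exc_input = np.arange(2, 9, 0.1)
exc_a, exc_theta = 1.2, 2.4
inh_a, inh_theta = 1.0, 4.0

def rate(exc, inh):
    """Hypothetical helper: excitatory sigmoid minus half the inhibitory one."""
    return sigmoid_function(exc, exc_a, exc_theta) - 0.5 * sigmoid_function(inh, inh_a, inh_theta)

shift = rate(exc_input, 0.0) - rate(exc_input, 4.0)
print(shift.min(), shift.max())             # identical up to rounding
```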

We can alternatively see how the neuron output changes with respect to inhibition and study how excitation affects that. This is visualized in the Top-Right plot.

This type of plot is very intuitive, but it becomes tedious to read when many lines have to be plotted. A nice solution to this visualization problem is to render the data as color, as a surface, or both.

This is what we have done in the plot at the bottom. The surface shows the neuron's output as a function of inhibitory and excitatory input, with the output rate shown both as height along the z-axis and as color. Dark blue/purple means a low firing rate, and yellow represents a high firing rate (see the color bar on the right).

In the above plot, the output rate of the neuron goes below zero. This is, of course, not physiological, as neurons cannot have negative firing rates. In models, we either choose the operating point so that the output does not go below zero, or we clamp the neuron output to zero whenever it would go below zero. You will learn more about this in Week 2.
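Clamping at zero is a one-line operation in NumPy. In this minimal sketch the array of rates is made up for illustration; `np.maximum(rate, 0)` (equivalently `np.clip(rate, 0, None)`) sets every negative entry to zero and leaves the rest untouched.

```python
import numpy as np

# Made-up rates for illustration; two entries are negative (non-physiological).
rate = np.array([[-0.3, 0.1],
                 [0.5, -0.05]])

rate_clamped = np.maximum(rate, 0)          # negative rates become 0
same_result = np.array_equal(rate_clamped, np.clip(rate, 0, None))

print(rate_clamped)
print(same_result)
```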

Bonus Section 1.2: Numerical partial derivatives¶

We can now compute the partial derivatives of our transfer function in response to excitatory and inhibitory input. We do so below!
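Before looking at the surfaces, it can help to check that a forward difference with `step_size = 0.1` is accurate enough for this sigmoid. The sketch below compares the numerical slope of the excitatory sigmoid (same parameters as the call below: \(a = 1.2\), \(\theta = 2.4\)) with its analytical derivative \(a\,s\,(1-s)\), where \(s\) is the logistic term; the constant offset in `sigmoid_function` does not affect the slope, and `sigmoid_slope` is a helper introduced here for illustration. Evaluating the analytical derivative at interval midpoints is a design choice: a forward difference is a second-order accurate estimate of the slope there.

```python
import numpy as np

def sigmoid_function(x, a, theta):
    """Shifted sigmoid used for the transfer function (equals 0 at x = 0)."""
    return (1 + np.exp(-a * (x - theta)))**-1 - (1 + np.exp(a * theta))**-1

def sigmoid_slope(x, a, theta):
    """Analytical derivative: a * s * (1 - s), with s the logistic term."""
    s = (1 + np.exp(-a * (x - theta)))**-1
    return a * s * (1 - s)

step_size = 0.1
exc_input = np.arange(1, 10, step_size)
exc_a, exc_theta = 1.2, 2.4

values = sigmoid_function(exc_input, exc_a, exc_theta)
numerical = np.diff(values) / step_size          # forward difference, one sample shorter
midpoints = exc_input[:-1] + step_size / 2       # where a forward difference is most accurate
analytical = sigmoid_slope(midpoints, exc_a, exc_theta)

max_error = np.max(np.abs(numerical - analytical))
print(f"Largest mismatch: {max_error:.2e}")
```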

Execute this cell to implement our neural transfer function, plot_2d_neuron_transfer_function, in response to excitatory and inhibitory input

# @markdown Execute this cell to implement our neural transfer function, `plot_2d_neuron_transfer_function`, in response to excitatory and inhibitory input

def plot_2d_neuron_transfer_function(exc_a, exc_theta, inh_a, inh_theta):

    # Neuron Transfer Function

    step_size = 0.1

    exc_input = np.arange(1,10,step_size)

    inh_input = np.arange(0,7,step_size)

    rate = np.zeros((len(exc_input),len(inh_input)))

    for ii in range(len(exc_input)):

        for jj in range(len(inh_input)):

            rate[ii,jj] = sigmoid_function(exc_input[ii],exc_a,exc_theta) - sigmoid_function(inh_input[jj],inh_a,inh_theta)*0.5

    # Derivative with respect to excitatory input rate

    rate_de = np.zeros((len(exc_input)-1,len(inh_input)))  # this will have one row fewer than the rate matrix

    for ii in range(len(inh_input)):

        rate_de[:,ii] = (rate[1:,ii] - rate[0:-1,ii])/step_size

    # Derivative with respect to inhibitory input rate

    rate_di = np.zeros((len(exc_input),len(inh_input)-1))  # this will have one column fewer than the rate matrix

    for ii in range(len(exc_input)):

        rate_di[ii,:] = (rate[ii,1:] - rate[ii,0:-1])/step_size

    X, Y = np.meshgrid(exc_input, inh_input)

    fig = plt.figure(figsize=(20,8))

    ax1 = fig.add_subplot(1, 3, 1, projection='3d')

    surf1 = ax1.plot_surface(Y.T, X.T, rate, rstride=1, cstride=1, cmap='viridis', edgecolor='none')

    ax1.set_xlabel('Inhibitory input (au)')

    ax1.set_ylabel('Excitatory input (au)')

    ax1.set_zlabel('Neuron output rate (au)')

    ax1.set_title('Rate as a function of Exc. and Inh.');

    ax1.view_init(45, 10)

    fig.colorbar(surf1)

    Xde, Yde = np.meshgrid(exc_input[0:-1], inh_input)

    ax2 = fig.add_subplot(1, 3, 2, projection='3d')

    surf2 = ax2.plot_surface(Yde.T, Xde.T, rate_de, rstride=1, cstride=1, cmap='viridis', edgecolor='none')

    ax2.set_xlabel('Inhibitory input (au)')

    ax2.set_ylabel('Excitatory input (au)')

    ax2.set_zlabel('d rate / d exc (au)');

    ax2.set_title('Derivative wrt Excitation');

    ax2.view_init(45, 10)

    fig.colorbar(surf2)

    Xdi, Ydi = np.meshgrid(exc_input, inh_input[:-1])

    ax3 = fig.add_subplot(1, 3, 3, projection='3d')

    surf3 = ax3.plot_surface(Ydi.T, Xdi.T, rate_di, rstride=1, cstride=1, cmap='viridis', edgecolor='none')

    ax3.set_xlabel('Inhibitory input (au)')

    ax3.set_ylabel('Excitatory input (au)')

    ax3.set_zlabel('d rate / d inh (au)');

    ax3.set_title('Derivative wrt Inhibition');

    ax3.view_init(15, -115)

    fig.colorbar(surf3)

plot_2d_neuron_transfer_function(exc_a=1.2, exc_theta=2.4, inh_a=1, inh_theta=4)

Is this what you expected? Change the parameters passed to the function to generate the 2-D transfer function of the neuron for different excitatory and inhibitory \(a\) and \(\theta\), and test your intuitions. Can you relate the shape of the partial derivative surface to the gain of the 1-D transfer function of a neuron (Section 2)?

Submit your feedback¶

# @title Submit your feedback

content_review(f"{feedback_prefix}_Numerical_partial_derivatives_Bonus_Discussion")