Tutorial 2: Markov Processes

Contents

Tutorial 2: Markov Processes¶

Week 2, Day 2: Linear Systems

By Neuromatch Academy

Content Creators: Bing Wen Brunton, Ellie Stradquist

Content Reviewers: Norma Kuhn, Karolina Stosio, John Butler, Matthew Krause, Ella Batty, Richard Gao, Michael Waskom, Ethan Cheng

Production editors: Gagana B, Spiros Chavlis

Tutorial Objectives¶

Estimated timing of tutorial: 45 minutes

In this tutorial, we will look at the dynamical systems introduced in the first tutorial through a different lens.

In Tutorial 1, we studied dynamical systems as a deterministic process. For Tutorial 2, we will look at probabilistic dynamical systems. You may sometimes hear these systems called stochastic. In a probabilistic process, elements of randomness are involved. Every time you observe some probabilistic dynamical system, starting from the same initial conditions, the outcome will likely be different. Put another way, dynamical systems that involve probability will incorporate random variations in their behavior.

For some probabilistic dynamical systems, the differential equations express a relationship between \(\dot{x}\) and \(x\) at every time \(t\), so that the direction of \(x\) at every time depends entirely on the value of \(x\). Said a different way, knowledge of the value of the state variables \(x\) at time t is all the information needed to determine \(\dot{x}\) and therefore \(x\) at the next time.

This property — that the present state entirely determines the transition to the next state — is what defines a Markov process and systems obeying this property can be described as Markovian.

The goal of Tutorial 2 is to consider this type of Markov process in a simple example where the state transitions are probabilistic. In particular, we will:

Understand Markov processes and history dependence.

Explore the behavior of a two-state telegraph process and understand how its equilibrium distribution is dependent on its parameters.

Setup¶

⚠ Experimental LLM-enhanced tutorial ⚠

This notebook includes Neuromatch’s experimental Chatify 🤖 functionality. The Chatify notebook extension adds support for a large language model-based “coding tutor” to the materials. The tutor provides automatically generated text to help explain any code cell in this notebook.

Note that using Chatify may cause breaking changes and/or provide incorrect or misleading information. If you wish to proceed by installing and enabling the Chatify extension, you should run the next two code blocks (hidden by default). If you do not want to use this experimental version of the Neuromatch materials, please use the stable materials instead.

To use the Chatify helper, insert the %%explain magic command at the start of any code cell and then run it (shift + enter) to access an interface for receiving LLM-based assitance. You can then select different options from the dropdown menus depending on what sort of assitance you want. To disable Chatify and run the code block as usual, simply delete the %%explain command and re-run the cell.

Note that, by default, all of Chatify’s responses are generated locally. This often takes several minutes per response. Once you click the “Submit request” button, just be patient– stuff is happening even if you can’t see it right away!

Thanks for giving Chatify a try! Love it? Hate it? Either way, we’d love to hear from you about your Chatify experience! Please consider filling out our brief survey to provide feedback and help us make Chatify more awesome!

Run the next two cells to install and configure Chatify…

%pip install -q davos

import davos

davos.config.suppress_stdout = True

Note: you may need to restart the kernel to use updated packages.

smuggle chatify # pip: git+https://github.com/ContextLab/chatify.git

%load_ext chatify

Downloading and initializing model; this may take a few minutes...

llama.cpp: loading model from /home/runner/.cache/huggingface/hub/models--TheBloke--Llama-2-7B-Chat-GGML/snapshots/501a3c8182cd256a859888fff4e838c049d5d7f6/llama-2-7b-chat.ggmlv3.q5_1.bin

llama_model_load_internal: format = ggjt v3 (latest)

llama_model_load_internal: n_vocab = 32000

llama_model_load_internal: n_ctx = 512

llama_model_load_internal: n_embd = 4096

llama_model_load_internal: n_mult = 256

llama_model_load_internal: n_head = 32

llama_model_load_internal: n_layer = 32

llama_model_load_internal: n_rot = 128

llama_model_load_internal: freq_base = 10000.0

llama_model_load_internal: freq_scale = 1

llama_model_load_internal: ftype = 9 (mostly Q5_1)

llama_model_load_internal: n_ff = 11008

llama_model_load_internal: model size = 7B

llama_model_load_internal: ggml ctx size = 0.08 MB

llama_model_load_internal: mem required = 6390.60 MB (+ 1026.00 MB per state)

AVX = 1 | AVX2 = 1 | AVX512 = 1 | AVX512_VBMI = 0 | AVX512_VNNI = 0 | FMA = 1 | NEON = 0 | ARM_FMA = 0 | F16C = 1 | FP16_VA = 0 | WASM_SIMD = 0 | BLAS = 0 | SSE3 = 1 | VSX = 0 | llama_new_context_with_model: kv self size = 256.00 MB

Install and import feedback gadget¶

# @title Install and import feedback gadget

!pip3 install vibecheck datatops --quiet

from vibecheck import DatatopsContentReviewContainer

def content_review(notebook_section: str):

return DatatopsContentReviewContainer(

"", # No text prompt

notebook_section,

{

"url": "https://pmyvdlilci.execute-api.us-east-1.amazonaws.com/klab",

"name": "neuromatch_cn",

"user_key": "y1x3mpx5",

},

).render()

feedback_prefix = "W2D2_T2"

# Imports

import numpy as np

import matplotlib.pyplot as plt

Figure Settings¶

# @title Figure Settings

import logging

logging.getLogger('matplotlib.font_manager').disabled = True

import ipywidgets as widgets # interactive display

%config InlineBackend.figure_format = 'retina'

plt.style.use("https://raw.githubusercontent.com/NeuromatchAcademy/course-content/main/nma.mplstyle")

Plotting Functions¶

# @title Plotting Functions

def plot_switch_simulation(t, x):

fig = plt.figure()

plt.plot(t, x)

plt.title('State-switch simulation')

plt.xlabel('Time')

plt.xlim((0, 300)) # zoom in time

plt.ylabel('State of ion channel 0/1', labelpad=-60)

plt.yticks([0, 1], ['Closed (0)', 'Open (1)'])

plt.show()

return

def plot_interswitch_interval_histogram(inter_switch_intervals):

fig = plt.figure()

plt.hist(inter_switch_intervals)

plt.title('Inter-switch Intervals Distribution')

plt.ylabel('Interval Count')

plt.xlabel('time')

plt.show()

def plot_state_probabilities(time, states):

fig = plt.figure()

plt.plot(time, states[:,0], label='Closed to open')

plt.plot(time, states[:,1], label='Open to closed')

plt.legend()

plt.xlabel('time')

plt.ylabel('prob(open OR closed)')

Section 1: Telegraph Process¶

Video 1: Markov Process¶

Submit your feedback¶

# @title Submit your feedback

content_review(f"{feedback_prefix}_Markov_Process_Video")

This video covers a definition of Markov processes and an introduction to ion channels opening/closing as an example of a telegraph process.

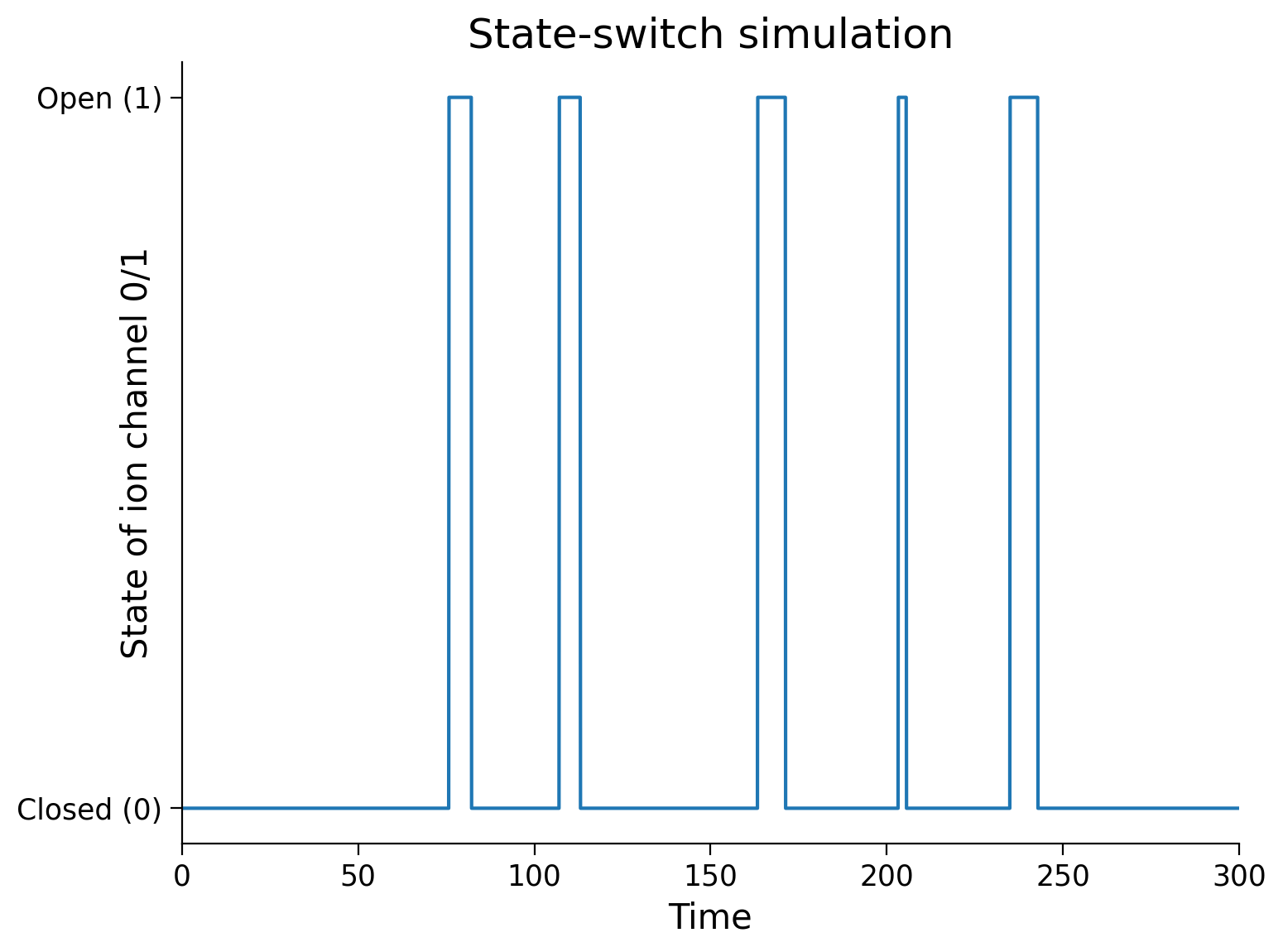

Let’s consider a Markov process with two states, where switches between each two states are probabilistic (known as a telegraph process). To be concrete, let’s say we are modeling an ion channel in a neuron that can be in one of two states: Closed (0) or Open (1).

If the ion channel is Closed, it may transition to the Open state with probability \(P(0 \rightarrow 1 | x = 0) = \mu_{c2o}\). Likewise, If the ion channel is Open, it transitions to Closed with probability \(P(1 \rightarrow 0 | x=1) = \mu_{o2c}\).

We simulate the process of changing states as a Poisson process. You have seen the Poisson process in the pre-reqs statistics day. The Poisson process is a way to model discrete events where the average time between event occurrences is known but the exact time of some event is not known. Importantly, the Poisson process dictates the following points:

The probability of some event occurring is independent from all other events.

The average rate of events within a given time period is constant.

Two events cannot occur at the same moment. Our ion channel can either be in an open or closed state, but not both simultaneously.

In the simulation below, we will use the Poisson process to model the state of our ion channel at all points \(t\) within the total simulation time \(T\).

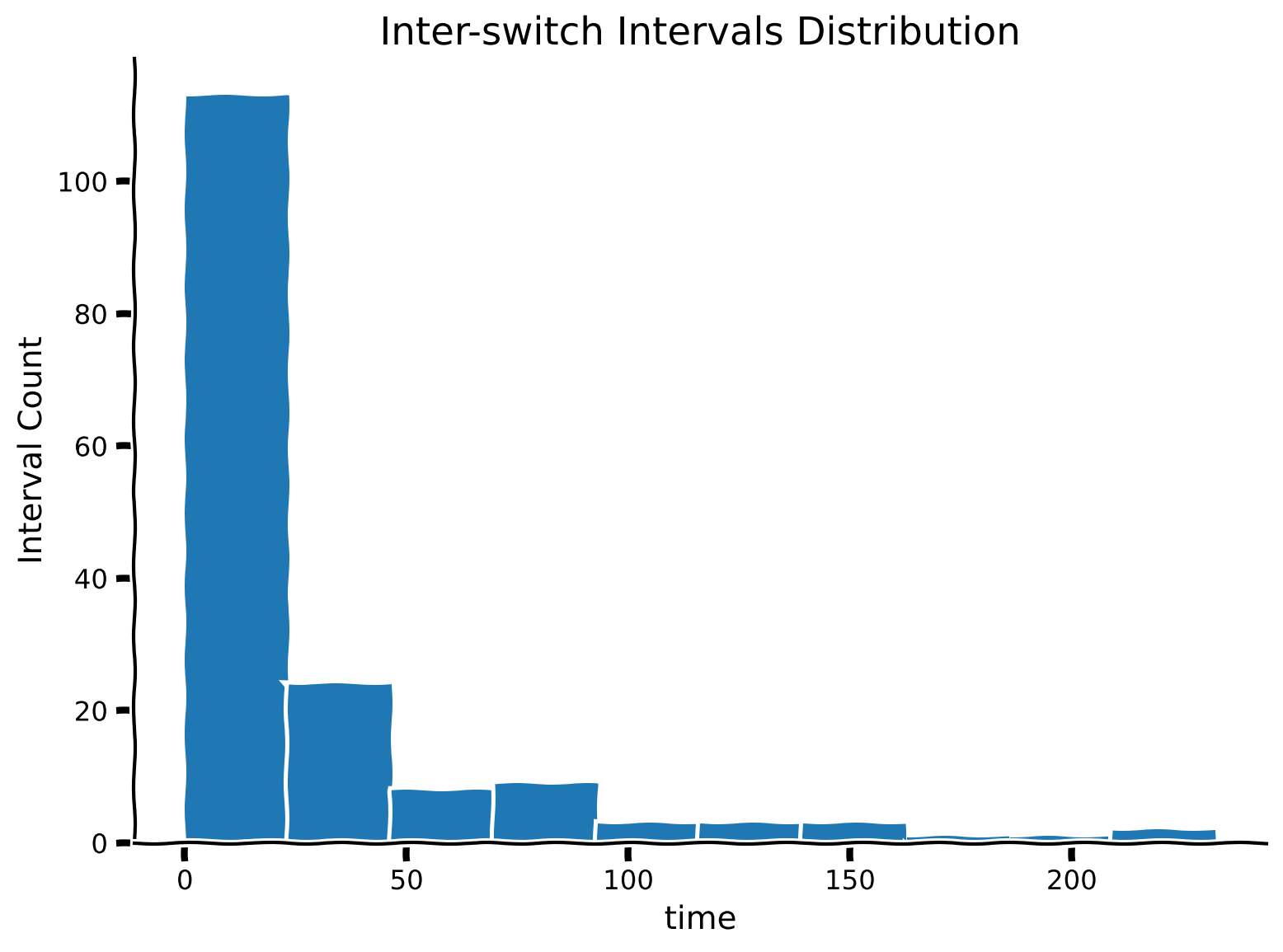

As we simulate the state change process, we also track at which times throughout the simulation the state makes a switch. We can use those times to measure the distribution of the time intervals between state switches.

You briefly saw a Markov process in the pre-reqs statistics day.

Run the cell below to show the state-change simulation process. Note that a random seed was set in the code block, so re-running the code will produce the same plot. Commenting out that line will produce a different simulation each run.

Execute to simulate state changes

# @markdown Execute to simulate state changes

# parameters

T = 5000 # total Time duration

dt = 0.001 # timestep of our simulation

# simulate state of our ion channel in time

# the two parameters that govern transitions are

# c2o: closed to open rate

# o2c: open to closed rate

def ion_channel_opening(c2o, o2c, T, dt):

# initialize variables

t = np.arange(0, T, dt)

x = np.zeros_like(t)

switch_times = []

# assume we always start in Closed state

x[0] = 0

# generate a bunch of random uniformly distributed numbers

# between zero and unity: [0, 1),

# one for each dt in our simulation.

# we will use these random numbers to model the

# closed/open transitions

myrand = np.random.random_sample(size=len(t))

# walk through time steps of the simulation

for k in range(len(t)-1):

# switching between closed/open states are

# Poisson processes

if x[k] == 0 and myrand[k] < c2o*dt: # remember to scale by dt!

x[k+1:] = 1

switch_times.append(k*dt)

elif x[k] == 1 and myrand[k] < o2c*dt:

x[k+1:] = 0

switch_times.append(k*dt)

return t, x, switch_times

c2o = 0.02

o2c = 0.1

np.random.seed(0) # set random seed

t, x, switch_times = ion_channel_opening(c2o, o2c, T, .1)

plot_switch_simulation(t,x)

Coding Exercise 1: Computing intervals between switches¶

Referred to in video as exercise 2A

We now have switch_times, which is a list consisting of times when the state switched. Using this, calculate the time intervals between each state switch and store these in a list called inter_switch_intervals.

We will then plot the distribution of these intervals. How would you describe the shape of the distribution?

##############################################################################

## TODO: Insert your code here to calculate between-state-switch intervals

raise NotImplementedError("Student exercise: need to calculate switch intervals")

##############################################################################

# hint: see np.diff()

inter_switch_intervals = ...

# plot inter-switch intervals

plot_interswitch_interval_histogram(inter_switch_intervals)

Example output:

Submit your feedback¶

# @title Submit your feedback

content_review(f"{feedback_prefix}_Computing_intervals_between_switches_Exercises")

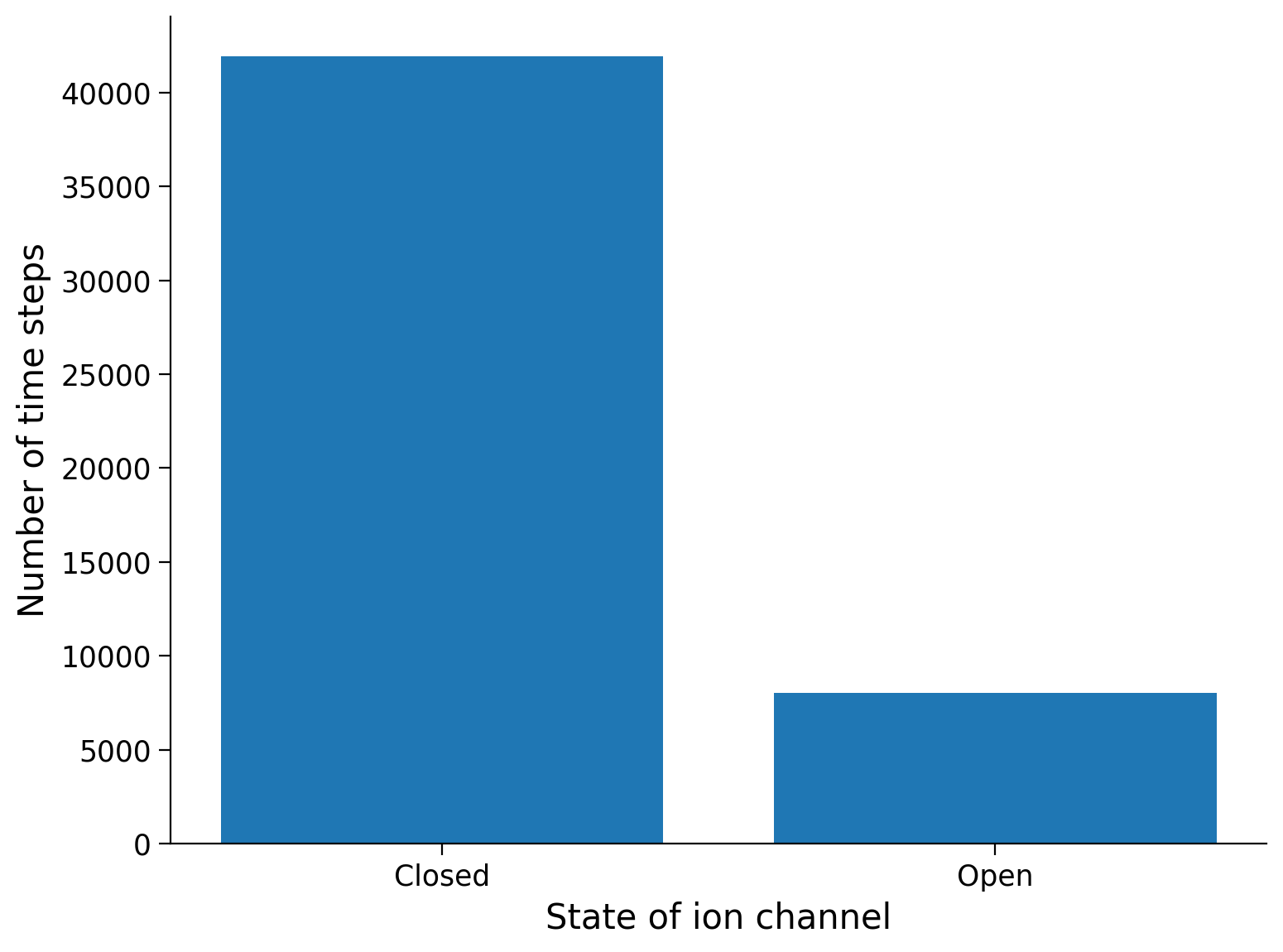

In the next cell, we generate a bar graph to visualize the distribution of the number of time-steps spent in each of the two possible system states during the simulation.

Execute cell to visualize distribution of time spent in each state.

# @markdown Execute cell to visualize distribution of time spent in each state.

states = ['Closed', 'Open']

(unique, counts) = np.unique(x, return_counts=True)

plt.bar(states, counts)

plt.ylabel('Number of time steps')

plt.xlabel('State of ion channel')

plt.show()

Even though the state is discrete–the ion channel can only be either Closed or Open–we can still look at the mean state of the system, averaged over some window of time.

Since we’ve coded Closed as \(x=0\) and Open as \(x=1\), conveniently, the mean of \(x\) over some window of time has the interpretation of fraction of time channel is Open.

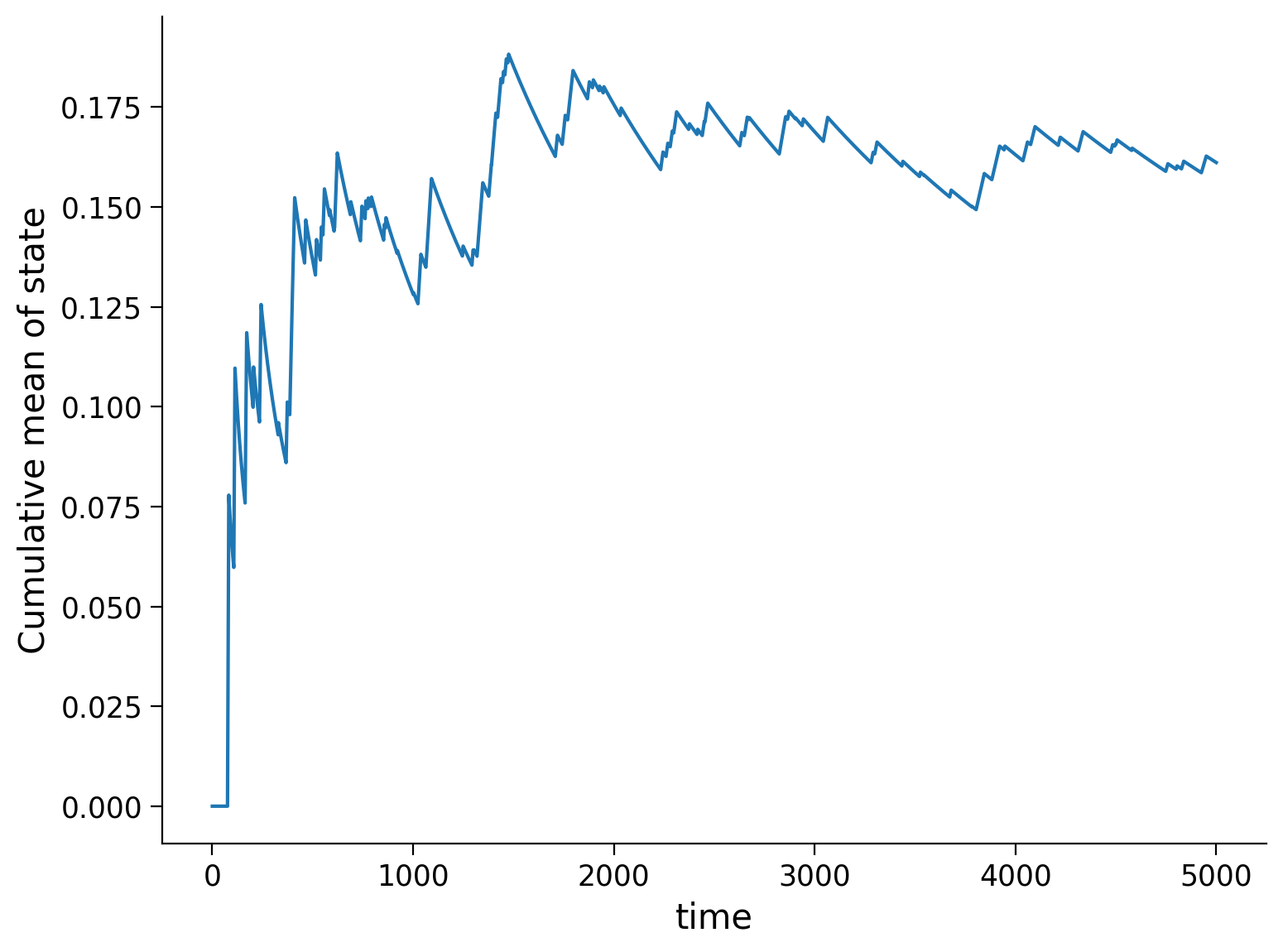

Let’s also take a look at the fraction of Open states as a cumulative mean of the state \(x\). The cumulative mean tells us the average number of state-changes that the system will have undergone after a certain amount of time.

Execute to visualize cumulative mean of state

# @markdown Execute to visualize cumulative mean of state

plt.plot(t, np.cumsum(x) / np.arange(1, len(t)+1))

plt.xlabel('time')

plt.ylabel('Cumulative mean of state')

plt.show()

Notice in the plot above that, although the channel started in the Closed (\(x=0\)) state, gradually adopted some mean value after some time. This mean value is related to the transition probabilities \(\mu_{c2o}\) and \(\mu_{o2c}\).

Interactive Demo 1: Varying transition probability values & \(T\)¶

Using the interactive demo below, explore the state-switch simulation for different transition probability values of states \(\mu_{c2o}\) and \(\mu_{o2c}\). Also, try different values for total simulation time length \(T\).

Does the general shape of the inter-switch interval distribution change or does it stay relatively the same?

How does the bar graph of system states change based on these values?

Make sure you execute this cell to enable the widget!

# @markdown Make sure you execute this cell to enable the widget!

@widgets.interact

def plot_inter_switch_intervals(c2o = (0,1, .01), o2c = (0, 1, .01),

T=(1000,10000, 1000)):

t, x, switch_times = ion_channel_opening(c2o, o2c, T, .1)

inter_switch_intervals = np.diff(switch_times)

#plot inter-switch intervals

plt.hist(inter_switch_intervals)

plt.title('Inter-switch Intervals Distribution')

plt.ylabel('Interval Count')

plt.xlabel('time')

plt.show()

plt.close()

Submit your feedback¶

# @title Submit your feedback

content_review(f"{feedback_prefix}_Varying_transition_probability_values_and_T_Interactive_Demo_and_Discussion")

Section 2: Distributional Perspective¶

Estimated timing to here from start of tutorial: 18 min

Video 2: State Transitions¶

This video serves as an introduction to the telegraph process of ion channels opening/closing with an alternative formulation as a matrix/vector representation of probabilistic state transitions.

Click here for text recap of video

We can run this simulation many times and gather empirical distributions of open/closed states. Alternatively, we can formulate the exact same system probabilistically, keeping track of the probability of being in each state.

(see diagram in lecture)

The same system of transitions can then be formulated using a vector of 2 elements as the state vector and a dynamics matrix \(\mathbf{A}\). The result of this formulation is a state transition matrix:

Each transition probability shown in the matrix is as follows:

\(1-\mu_{\text{c2o}}\), the probability that the closed state remains closed.

\(\mu_{\text{c2o}}\), the probability that the closed state transitions to the open state.

\(\mu_{\text{o2c}}\), the probability that the open state transitions to the closed state.

\(1-\mu_{\text{o2c}}\), the probability that the open state remains open.

Notice that this system is written as a discrete step in time, and \(\mathbf{A}\) describes the transition, mapping the state from step \(k\) to step \(k+1\). This is different from what we did in the exercises above where \(\mathbf{A}\) had described the function from the state to the time derivative of the state.

Coding Exercise 2: Probability Propagation¶

Referred to in video as exercise 2B

Complete the code below to simulate the propagation of probabilities of closed/open of the ion channel through time. A variable called x_kp1 (short for, \(x\) at timestep \(k\) plus 1) should be calculated per each step k in the loop. However, you should plot \(x\).

def simulate_prob_prop(A, x0, dt, T):

""" Simulate the propagation of probabilities given the transition matrix A,

with initial state x0, for a duration of T at timestep dt.

Args:

A (ndarray): state transition matrix

x0 (ndarray): state probabilities at time 0

dt (scalar): timestep of the simulation

T (scalar): total duration of the simulation

Returns:

ndarray, ndarray: `x` for all simulation steps and the time `t` at each step

"""

# Initialize variables

t = np.arange(0, T, dt)

x = x0 # x at time t_0

# Step through the system in time

for k in range(len(t)-1):

###################################################################

## TODO: Insert your code here to compute x_kp1 (x at k plus 1)

raise NotImplementedError("Student exercise: need to implement simulation")

## hint: use np.dot(a, b) function to compute the dot product

## of the transition matrix A and the last state in x

## hint 2: use np.vstack to append the latest state to x

###################################################################

# Compute the state of x at time k+1

x_kp1 = ...

# Stack (append) this new state onto x to keep track of x through time steps

x = ...

return x, t

# Set parameters

T = 500 # total Time duration

dt = 0.1 # timestep of our simulation

# same parameters as above

# c2o: closed to open rate

# o2c: open to closed rate

c2o = 0.02

o2c = 0.1

A = np.array([[1 - c2o*dt, o2c*dt],

[c2o*dt, 1 - o2c*dt]])

# Initial condition: start as Closed

x0 = np.array([[1, 0]])

# Simulate probabilities propagation

x, t = simulate_prob_prop(A, x0, dt, T)

# Visualize

plot_state_probabilities(t, x)

Example output:

Submit your feedback¶

# @title Submit your feedback

content_review(f"{feedback_prefix}_Probability_propagation_Exercise")

Here, we simulated the propagation of probabilities of the ion channel’s state changing through time. Using this method is useful in that we can run the simulation once and see how the probabilities propagate throughout time, rather than re-running and empirically observing the telegraph simulation over and over again.

Although the system started initially in the Closed (\(x=0\)) state, over time, it settles into a equilibrium distribution where we can predict what fraction of time it is Open as a function of the \(\mu\) parameters. We can say that the plot above shows this relaxation towards equilibrium.

Re-calculating our value of the probability of \(c2o\) again with this method, we see that this matches the simulation output from the telegraph process!

print(f"Probability of state c2o: {(c2o / (c2o + o2c)):.3f}")

Probability of state c2o: 0.167

Section 3: Equilibrium of the telegraph process¶

Estimated timing to here from start of tutorial: 30 min

Video 3: Continuous vs. Discrete Time Formulation¶

Submit your feedback¶

# @title Submit your feedback

content_review(f"{feedback_prefix}_Continuous_vs_Discrete_time_fromulation_Video")

Since we have now modeled the propagation of probabilities by the transition matrix \(\mathbf{A}\) in Section 2, let’s connect the behavior of the system at equilibrium with the eigendecomposition of \(\mathbf{A}\).

As introduced in the lecture video, the eigenvalues of \(\mathbf{A}\) tell us about the stability of the system, specifically in the directions of the corresponding eigenvectors.

# compute the eigendecomposition of A

lam, v = np.linalg.eig(A)

# print the 2 eigenvalues

print(f"Eigenvalues: {lam}")

# print the 2 eigenvectors

eigenvector1 = v[:, 0]

eigenvector2 = v[:, 1]

print(f"Eigenvector 1: {eigenvector1}")

print(f"Eigenvector 2: {eigenvector2}")

Eigenvalues: [1. 0.988]

Eigenvector 1: [0.98058068 0.19611614]

Eigenvector 2: [-0.70710678 0.70710678]

Think! 3: Finding a stable state¶

Which of these eigenvalues corresponds to the stable (equilibrium) solution?

What is the eigenvector of this eigenvalue?

How does that explain the equilibrium solutions in simulation in Section 2 of this tutorial?

hint: our simulation is written in terms of probabilities, so they must sum to 1. Therefore, you may also want to rescale the elements of the eigenvector such that they also sum to 1. These can then be directly compared with the probabilities of the states in the simulation.

Submit your feedback¶

# @title Submit your feedback

content_review(f"{feedback_prefix}_Finding_a_stable_state_Discussion")

Summary¶

Estimated timing of tutorial: 45 minutes

In this tutorial, we learned:

The definition of a Markov process with history dependence.

The behavior of a simple 2-state Markov process –the telegraph process– can be simulated either as a state-change simulation or as a propagation of probability distributions.

The relationship between the stability analysis of a dynamical system expressed either in continuous or discrete time.

The equilibrium behavior of a telegraph process is predictable and can be understood using the same strategy as for deterministic systems in Tutorial 1: by taking the eigendecomposition of the \(\mathbf{A}\) matrix.