Tutorial 3: Reconstructing Past Changes in Terrestrial Climate


Week 1, Day 4, Paleoclimate

Content creators: Sloane Garelick

Content reviewers: Yosmely Bermúdez, Dionessa Biton, Katrina Dobson, Maria Gonzalez, Will Gregory, Nahid Hasan, Sherry Mi, Beatriz Cosenza Muralles, Brodie Pearson, Jenna Pearson, Chi Zhang, Ohad Zivan

Content editors: Yosmely Bermúdez, Zahra Khodakaramimaghsoud, Jenna Pearson, Agustina Pesce, Chi Zhang, Ohad Zivan

Production editors: Wesley Banfield, Jenna Pearson, Chi Zhang, Ohad Zivan

Our 2023 Sponsors: NASA TOPS and Google DeepMind

Tutorial Objectives

In this tutorial, we’ll explore the Euro2k proxy network, which is a subset of PAGES2k, the database we explored in the first tutorial. We will specifically focus on interpreting temperature change over the past 2,000 years as recorded by proxy records from tree rings, speleothems, and lake sediments. To analyze these datasets, we will group them by archive type and create time series plots to assess temperature variations.

During this tutorial you will:

Plot temperature records based on three different terrestrial proxies

Assess similarities and differences between the temperature records

Setup

# installations ( uncomment and run this cell ONLY when using google colab or kaggle )

# !pip install LiPD

# !pip install cartopy

# !pip install pyleoclim

# imports

import pyleoclim as pyleo

import pandas as pd

import numpy as np

import os

import pooch

import tempfile

import matplotlib.pyplot as plt

import cartopy

import cartopy.crs as ccrs

import cartopy.feature as cfeature

from io import StringIO

import sys

# @title Helper functions

def pooch_load(filelocation=None, filename=None, processor=None):
    # this is different for each day
    shared_location = "/home/jovyan/shared/Data/tutorials/W1D4_Paleoclimate"
    user_temp_cache = tempfile.gettempdir()

    # use the shared copy if it exists, otherwise download to a temporary cache
    if os.path.exists(os.path.join(shared_location, filename)):
        file = os.path.join(shared_location, filename)
    else:
        file = pooch.retrieve(
            filelocation,
            known_hash=None,
            fname=os.path.join(user_temp_cache, filename),
            processor=processor,
        )

    return file


class SupressOutputs(list):
    def __enter__(self):
        self._stdout = sys.stdout
        sys.stdout = self._stringio = StringIO()
        return self

    def __exit__(self, *args):
        self.extend(self._stringio.getvalue().splitlines())
        del self._stringio  # free up some memory
        sys.stdout = self._stdout

# @title Video 1: Terrestrial Climate Proxies

from ipywidgets import widgets

from IPython.display import YouTubeVideo

from IPython.display import IFrame

from IPython.display import display

class PlayVideo(IFrame):
    def __init__(self, id, source, page=1, width=400, height=300, **kwargs):
        self.id = id
        if source == "Bilibili":
            src = f"https://player.bilibili.com/player.html?bvid={id}&page={page}"
        elif source == "Osf":
            src = f"https://mfr.ca-1.osf.io/render?url=https://osf.io/download/{id}/?direct%26mode=render"
        super(PlayVideo, self).__init__(src, width, height, **kwargs)


def display_videos(video_ids, W=400, H=300, fs=1):
    tab_contents = []
    for i, video_id in enumerate(video_ids):
        out = widgets.Output()
        with out:
            if video_ids[i][0] == "Youtube":
                video = YouTubeVideo(
                    id=video_ids[i][1], width=W, height=H, fs=fs, rel=0
                )
                print(f"Video available at https://youtube.com/watch?v={video.id}")
            else:
                video = PlayVideo(
                    id=video_ids[i][1],
                    source=video_ids[i][0],
                    width=W,
                    height=H,
                    fs=fs,
                    autoplay=False,
                )
                if video_ids[i][0] == "Bilibili":
                    print(
                        f"Video available at https://www.bilibili.com/video/{video.id}"
                    )
                elif video_ids[i][0] == "Osf":
                    print(f"Video available at https://osf.io/{video.id}")
            display(video)
        tab_contents.append(out)
    return tab_contents


video_ids = [("Youtube", "wU2gNOYOXrQ"), ("Bilibili", "BV1s94y1B7Yx")]
tab_contents = display_videos(video_ids, W=730, H=410)

tabs = widgets.Tab()
tabs.children = tab_contents
for i in range(len(tab_contents)):
    tabs.set_title(i, video_ids[i][0])
display(tabs)

Section 1: Loading Terrestrial Paleoclimate Records

First, we need to download the data. Similar to Tutorial 1, the data is stored as a LiPD file, and we will be using Pyleoclim to format and interpret the data.

# set the name to save the Euro2k data

fname = "euro2k_data"

# download the data

lipd_file_path = pooch.retrieve(

url="https://osf.io/7ezp3/download/",

known_hash=None,

path="./",

fname=fname,

processor=pooch.Unzip(),

)

# the LiPD object can be used to load datasets stored in the LiPD format.

# in this first case study, we will load an entire library of LiPD files:

with SupressOutputs():
    d_euro = pyleo.Lipd(os.path.join(".", f"{fname}.unzip", "Euro2k"))

/tmp/ipykernel_389730/2101573595.py:4: DeprecationWarning: The Lipd class is being deprecated and will be removed in Pyleoclim v1.0.0. Functionalities will instead be handled by the pyLipd package.

d_euro = pyleo.Lipd(os.path.join(".", f"{fname}.unzip", "Euro2k"))

Section 2: Temperature Reconstructions

Before plotting, let’s narrow the data down a bit. We can filter all of the data so that we only keep reconstructions of temperature from terrestrial archives (e.g. tree rings, speleothems and lake sediments). This is accomplished with the function below.

def filter_data(dataset, archive_type, variable_name):
    """
    Return a MultipleSeries object with the variable record (variable_name) for a given archive_type, along with the latitudes and longitudes of the matching records.
    """
    # Create a list of dictionaries that can be iterated upon using the Lipd.to_tso method
    ts_list = dataset.to_tso()
    # Collect the indices that match the given archive_type and variable_name
    indices = []
    lat = []
    lon = []
    for idx, item in enumerate(ts_list):
        # Check that the key is available to avoid errors in the loop
        if "archiveType" in item.keys():
            # If the archive type matches, then proceed to the next step
            if item["archiveType"] == archive_type:
                if item["paleoData_variableName"] == variable_name:
                    indices.append(idx)
    print(indices)
    # Create a list of LipdSeries for the given indices
    ts_list_archive_type = []
    for indice in indices:
        ts_list_archive_type.append(pyleo.LipdSeries(ts_list[indice]))
        # save the latitudes and longitudes of the proxies
        lat.append(ts_list[indice]["geo_meanLat"])
        lon.append(ts_list[indice]["geo_meanLon"])

    return pyleo.MultipleSeries(ts_list_archive_type), lat, lon

In the function above, the Lipd.to_tso method is used to obtain a list of dictionaries that can be iterated upon.

ts_list = d_euro.to_tso()

extracting paleoData...

extracting: Eur-Lötschental.Büntgen.2006

extracting: Eur-TatraMountains.Büntgen.2013

extracting: Ocn-AqabaJordanAQ18.Heiss.1999

extracting: Eur-SpannagelCave.Mangini.2005

extracting: Arc-Tjeggelvas.Bjorklund.2012

extracting: Arc-Indigirka.Hughes.1999

extracting: Eur-LakeSilvaplana.Trachsel.2010

extracting: Eur-RAPiD-17-5P.Moffa-Sanchez.2014

extracting: Eur-CentralEurope.Dobrovolný.2009

extracting: Eur-CoastofPortugal.Abrantes.2011

extracting: Eur-MaritimeFrenchAlps.Büntgen.2012

extracting: Arc-AkademiiNaukIceCap.Opel.2013

extracting: Arc-GulfofAlaska.Wilson.2014

extracting: Eur-SpanishPyrenees.Dorado-Linan.2012

extracting: Eur-NorthernSpain.Martín-Chivelet.2011

extracting: Eur-FinnishLakelands.Helama.2014

extracting: Arc-PolarUrals.Wilson.2015

extracting: Ocn-AqabaJordanAQ19.Heiss.1999

extracting: Eur-EasternCarpathianMountains.Popa.2008

extracting: Eur-Seebergsee.Larocque-Tobler.2012

extracting: Arc-Forfjorddalen.McCarroll.2013

extracting: Eur-Stockholm.Leijonhufvud.2009

extracting: Eur-NorthernScandinavia.Esper.2012

extracting: Eur-Tallinn.Tarand.2001

extracting: Eur-EuropeanAlps.Büntgen.2011

extracting: Arc-Jamtland.Wilson.2016

extracting: Eur-CentralandEasternPyrenees.Pla.2004

extracting: Arc-Kittelfjall.Bjorklund.2012

extracting: Arc-Tornetrask.Melvin.2012

extracting: Eur-LakeSilvaplana.Larocque-Tobler.2010

extracting: Ocn-RedSea.Felis.2000

Created time series: 73 entries
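The matching step inside filter_data can be tried out without downloading anything. The sketch below applies the same two tests to a small hand-written list of dictionaries. The entries in `mock_ts_list` and the `select_indices` helper are hypothetical and only mimic the keys used above; `dict.get` is used so that an entry missing either key is skipped instead of raising a `KeyError`.

```python
# Hypothetical stand-in for the list returned by Lipd.to_tso():
# each time series is a flat dictionary of metadata.
mock_ts_list = [
    {"archiveType": "tree", "paleoData_variableName": "MXD"},
    {"archiveType": "lake sediment", "paleoData_variableName": "temperature"},
    {"paleoData_variableName": "temperature"},  # no archiveType key
    {"archiveType": "lake sediment", "paleoData_variableName": "year"},
    {"archiveType": "lake sediment", "paleoData_variableName": "temperature"},
]


def select_indices(ts_list, archive_type, variable_name):
    """Return the positions of entries matching both archive type and variable."""
    return [
        idx
        for idx, item in enumerate(ts_list)
        if item.get("archiveType") == archive_type
        and item.get("paleoData_variableName") == variable_name
    ]


lake_indices = select_indices(mock_ts_list, "lake sediment", "temperature")
print(lake_indices)  # [1, 4]
```

Returning indices rather than the entries themselves keeps the link back to the original list, which is how filter_data later looks up the coordinates of each matching record.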

Dictionaries are native to Python and can be explored as shown below.

# look at available entries for just one time-series

ts_list[0].keys()

dict_keys(['mode', 'time_id', '@context', 'archiveType', 'createdBy', 'dataSetName', 'googleDataURL', 'googleMetadataWorksheet', 'googleSpreadSheetKey', 'originalDataURL', 'tagMD5', 'pub1_author', 'pub1_citeKey', 'pub1_dataUrl', 'pub1_journal', 'pub1_pages', 'pub1_publisher', 'pub1_title', 'pub1_type', 'pub1_volume', 'pub1_year', 'pub1_doi', 'pub2_author', 'pub2_Urldate', 'pub2_citeKey', 'pub2_institution', 'pub2_title', 'pub2_type', 'pub2_url', 'geo_type', 'geo_meanLon', 'geo_meanLat', 'geo_meanElev', 'geo_pages2kRegion', 'geo_siteName', 'lipdVersion', 'tableType', 'paleoData_paleoDataTableName', 'paleoData_paleoDataMD5', 'paleoData_googleWorkSheetKey', 'paleoData_measurementTableName', 'paleoData_measurementTableMD5', 'paleoData_filename', 'paleoData_tableName', 'paleoData_missingValue', 'year', 'yearUnits', 'paleoData_QCCertification', 'paleoData_QCnotes', 'paleoData_TSid', 'paleoData_WDSPaleoUrl', 'paleoData_archiveType', 'paleoData_hasMaxValue', 'paleoData_hasMeanValue', 'paleoData_hasMedianValue', 'paleoData_hasMinValue', 'paleoData_inCompilation', 'paleoData_pages2kID', 'paleoData_paleoMeasurementTableMD5', 'paleoData_proxy', 'paleoData_proxyObservationType', 'paleoData_units', 'paleoData_useInGlobalTemperatureAnalysis', 'paleoData_variableName', 'paleoData_variableType', 'paleoData_hasResolution_hasMinValue', 'paleoData_hasResolution_hasMaxValue', 'paleoData_hasResolution_hasMeanValue', 'paleoData_hasResolution_hasMedianValue', 'paleoData_interpretation', 'paleoData_number', 'paleoData_values'])

# print relevant information for all entries

for idx, item in enumerate(ts_list):
    print(str(idx) + ": " + item["dataSetName"] +
          ": " + item["paleoData_variableName"])

0: Eur-Lötschental.Büntgen.2006: MXD

1: Eur-Lötschental.Büntgen.2006: year

2: Eur-TatraMountains.Büntgen.2013: trsgi

3: Eur-TatraMountains.Büntgen.2013: year

4: Ocn-AqabaJordanAQ18.Heiss.1999: d18O

5: Ocn-AqabaJordanAQ18.Heiss.1999: year

6: Ocn-AqabaJordanAQ18.Heiss.1999: d13C

7: Eur-SpannagelCave.Mangini.2005: d18O

8: Eur-SpannagelCave.Mangini.2005: year

9: Arc-Tjeggelvas.Bjorklund.2012: density

10: Arc-Tjeggelvas.Bjorklund.2012: year

11: Arc-Indigirka.Hughes.1999: trsgi

12: Arc-Indigirka.Hughes.1999: year

13: Eur-LakeSilvaplana.Trachsel.2010: temperature

14: Eur-LakeSilvaplana.Trachsel.2010: year

15: Eur-RAPiD-17-5P.Moffa-Sanchez.2014: d18O

16: Eur-RAPiD-17-5P.Moffa-Sanchez.2014: year

17: Eur-CentralEurope.Dobrovolný.2009: temperature

18: Eur-CentralEurope.Dobrovolný.2009: year

19: Eur-CoastofPortugal.Abrantes.2011: temperature

20: Eur-CoastofPortugal.Abrantes.2011: year

21: Eur-MaritimeFrenchAlps.Büntgen.2012: trsgi

22: Eur-MaritimeFrenchAlps.Büntgen.2012: year

23: Arc-AkademiiNaukIceCap.Opel.2013: thickness

24: Arc-AkademiiNaukIceCap.Opel.2013: year

25: Arc-AkademiiNaukIceCap.Opel.2013: age

26: Arc-AkademiiNaukIceCap.Opel.2013: d18O

27: Arc-AkademiiNaukIceCap.Opel.2013: Na

28: Arc-GulfofAlaska.Wilson.2014: temperature

29: Arc-GulfofAlaska.Wilson.2014: year

30: Eur-SpanishPyrenees.Dorado-Linan.2012: trsgi

31: Eur-SpanishPyrenees.Dorado-Linan.2012: year

32: Eur-NorthernSpain.Martín-Chivelet.2011: d18O

33: Eur-NorthernSpain.Martín-Chivelet.2011: year

34: Eur-FinnishLakelands.Helama.2014: temperature

35: Eur-FinnishLakelands.Helama.2014: year

36: Arc-PolarUrals.Wilson.2015: density

37: Arc-PolarUrals.Wilson.2015: year

38: Ocn-AqabaJordanAQ19.Heiss.1999: d18O

39: Ocn-AqabaJordanAQ19.Heiss.1999: year

40: Ocn-AqabaJordanAQ19.Heiss.1999: d13C

41: Eur-EasternCarpathianMountains.Popa.2008: trsgi

42: Eur-EasternCarpathianMountains.Popa.2008: year

43: Eur-Seebergsee.Larocque-Tobler.2012: temperature

44: Eur-Seebergsee.Larocque-Tobler.2012: year

45: Arc-Forfjorddalen.McCarroll.2013: MXD

46: Arc-Forfjorddalen.McCarroll.2013: year

47: Eur-Stockholm.Leijonhufvud.2009: temperature

48: Eur-Stockholm.Leijonhufvud.2009: year

49: Eur-NorthernScandinavia.Esper.2012: MXD

50: Eur-NorthernScandinavia.Esper.2012: year

51: Eur-Tallinn.Tarand.2001: temperature

52: Eur-Tallinn.Tarand.2001: year

53: Eur-Tallinn.Tarand.2001: JulianDay

54: Eur-EuropeanAlps.Büntgen.2011: trsgi

55: Eur-EuropeanAlps.Büntgen.2011: year

56: Arc-Jamtland.Wilson.2016: MXD

57: Arc-Jamtland.Wilson.2016: year

58: Eur-CentralandEasternPyrenees.Pla.2004: sampleID

59: Eur-CentralandEasternPyrenees.Pla.2004: year

60: Eur-CentralandEasternPyrenees.Pla.2004: age

61: Eur-CentralandEasternPyrenees.Pla.2004: temperature

62: Eur-CentralandEasternPyrenees.Pla.2004: uncertainty_temperature

63: Arc-Kittelfjall.Bjorklund.2012: density

64: Arc-Kittelfjall.Bjorklund.2012: year

65: Arc-Tornetrask.Melvin.2012: temperature

66: Arc-Tornetrask.Melvin.2012: year

67: Arc-Tornetrask.Melvin.2012: temperature

68: Arc-Tornetrask.Melvin.2012: sampleDensity

69: Eur-LakeSilvaplana.Larocque-Tobler.2010: temperature

70: Eur-LakeSilvaplana.Larocque-Tobler.2010: year

71: Ocn-RedSea.Felis.2000: d18O

72: Ocn-RedSea.Felis.2000: year
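With 73 entries, a tally can be easier to read than the full listing. The following sketch counts how often each variable name occurs using `collections.Counter`. The entries in `mock_ts_list` are made up for illustration; in the notebook, the same expression would presumably run over `ts_list` instead.

```python
from collections import Counter

# Hypothetical entries that use the same keys as the real time-series dictionaries.
mock_ts_list = [
    {"dataSetName": "SiteA", "paleoData_variableName": "temperature"},
    {"dataSetName": "SiteA", "paleoData_variableName": "year"},
    {"dataSetName": "SiteB", "paleoData_variableName": "d18O"},
    {"dataSetName": "SiteB", "paleoData_variableName": "year"},
    {"dataSetName": "SiteC", "paleoData_variableName": "temperature"},
]

# Count how many entries carry each variable name.
variable_counts = Counter(item["paleoData_variableName"] for item in mock_ts_list)

# Print the tally, most frequent variable first.
for name, count in variable_counts.most_common():
    print(f"{name}: {count}")
```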

Now let’s use our pre-defined function to create a new list that only has temperature reconstructions based on proxies from lake sediments:

ms_euro_lake, euro_lake_lat, euro_lake_lon = filter_data(

d_euro, "lake sediment", "temperature"

)

[13, 43, 61, 69]

Both age and year information are available, using age

/tmp/ipykernel_389730/2936504408.py:22: DeprecationWarning: The LipdSeries class is being deprecated and will be removed in Pyleoclim v1.0.0. It will be replaced by the geoSeries class (currently in development).

ts_list_archive_type.append(pyleo.LipdSeries(ts_list[indice]))

And a new list that only has temperature reconstructions based on proxies from tree rings:

ms_euro_tree, euro_tree_lat, euro_tree_lon = filter_data(

d_euro, "tree", "temperature")

[28, 34, 65, 67]

And finally, a new list that only has temperature information based on proxies from speleothems:

ms_euro_spel, euro_spel_lat, euro_spel_lon = filter_data(

d_euro, "speleothem", "d18O")

[7, 32]

Coding Exercises 2

Using the coordinate information output from the filter_data() function, make a plot of the locations of the proxies using the markers and colors from Tutorial 1. Note that data close together may not be very visible.

# initiate the plot with a specific figure size

fig = plt.figure(figsize=(9, 6))

# set base map projection

ax = plt.axes(projection=ccrs.Robinson())

ax.set_global()

# add land features, filled in black ("k")

ax.add_feature(cfeature.LAND, facecolor="k")

# add coastlines

ax.add_feature(cfeature.COASTLINE)

# add the proxy locations

# Uncomment and complete following line

# _ = ax.scatter(

# ...,

# ...,

# transform=ccrs.Geodetic(),

# label=...,

# s=50,

# marker="d",

# color=[0.52734375, 0.8046875, 0.97916667],

# edgecolor="k",

# zorder=2,

# )

# _ = ax.scatter(

# ...,

# ...,

# transform=ccrs.Geodetic(),

# label=...,

# s=50,

# marker="p",

# color=[0.73828125, 0.71484375, 0.41796875],

# edgecolor="k",

# zorder=2,

# )

# _ = ax.scatter(

# ...,

# ...,

# transform=ccrs.Geodetic(),

# label=...,

# s=50,

# marker="8",

# color=[1, 0, 0],

# edgecolor="k",

# zorder=2,

# )

# change the map view to show only the Northern Hemisphere, where the proxy sites are located

ax.set_extent((0, 360, 0, 90), crs=ccrs.PlateCarree())

ax.legend(

scatterpoints=1,

bbox_to_anchor=(0, -0.4),

loc="lower left",

ncol=3,

fontsize=15,

)

<matplotlib.legend.Legend at 0x7fd5b5653fa0>

Since we are going to compare temperature datasets based on different terrestrial climate archives (lake sediments, tree rings and speleothems), the measurements in each record have different units and magnitudes: the lake sediment and tree ring data are temperatures in degrees C, but the speleothem data are oxygen isotope ratios in per mille. Therefore, to compare the records more easily, it’s helpful to standardize the data as we did in Tutorial 2. The .standardize() function removes the estimated mean of the time series and divides by its estimated standard deviation.

# standardize the data

spel_stnd = ms_euro_spel.standardize()

lake_stnd = ms_euro_lake.standardize()

tree_stnd = ms_euro_tree.standardize()
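The standardization described above can be sketched in a few lines of plain Python. This is an illustration of the arithmetic only, not Pyleoclim's implementation: the `standardize` helper and the sample values are hypothetical, and the use of the population standard deviation is an assumption, since the library may use a different estimator.

```python
import statistics


def standardize(values):
    """Return z-scores: subtract the mean, then divide by the standard deviation."""
    mean = statistics.fmean(values)
    # Assumption: population standard deviation (the library may differ).
    std = statistics.pstdev(values)
    return [(v - mean) / std for v in values]


# Made-up values in different units: temperature (deg C) and d18O (per mille).
temperature = [8.0, 9.0, 10.0, 11.0, 12.0]
d18o = [-7.9, -7.8, -7.7, -7.6, -7.5]

z_temperature = standardize(temperature)
z_d18o = standardize(d18o)

# After standardization both series are unitless, with mean 0 and spread 1.
print([round(z, 2) for z in z_temperature])
print([round(z, 2) for z in z_d18o])
```

Although the two inputs differ in units and magnitude, their standardized values are directly comparable, which is what makes the stacked plots below easier to read side by side.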

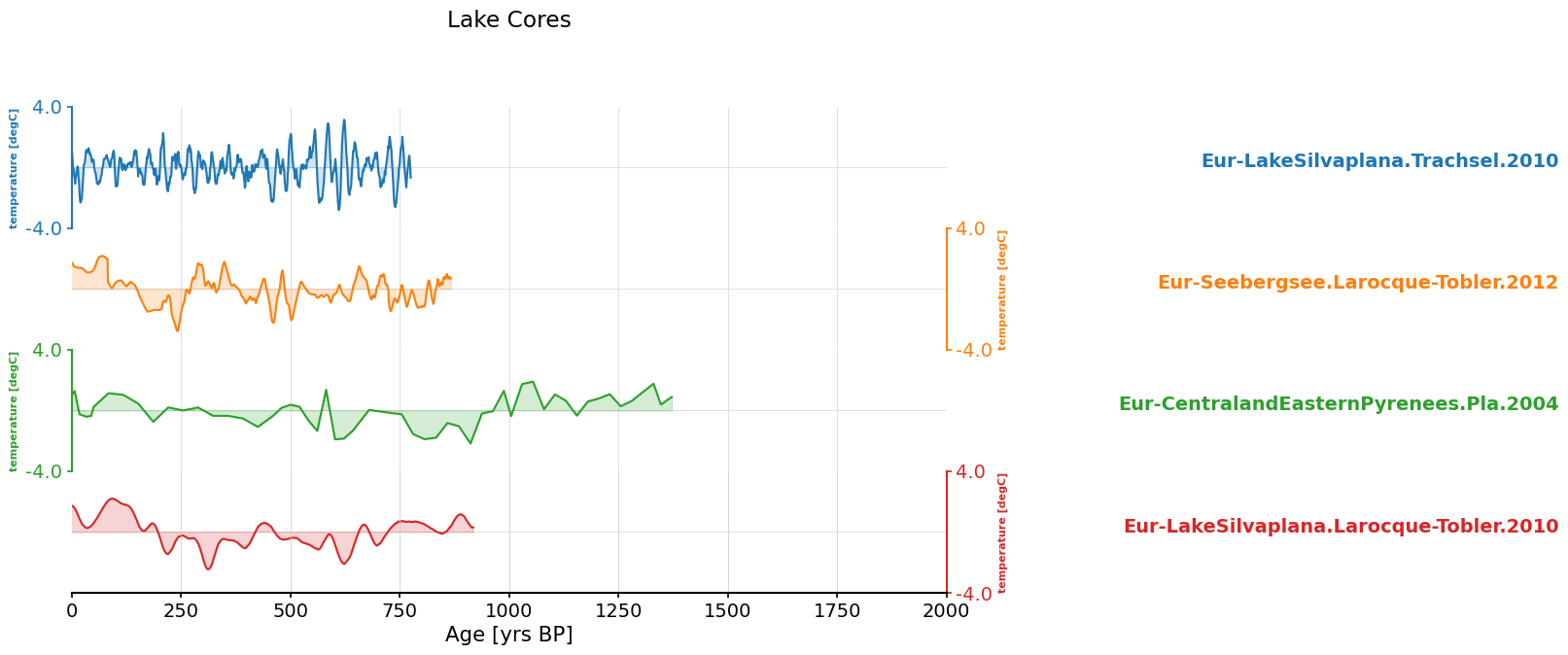

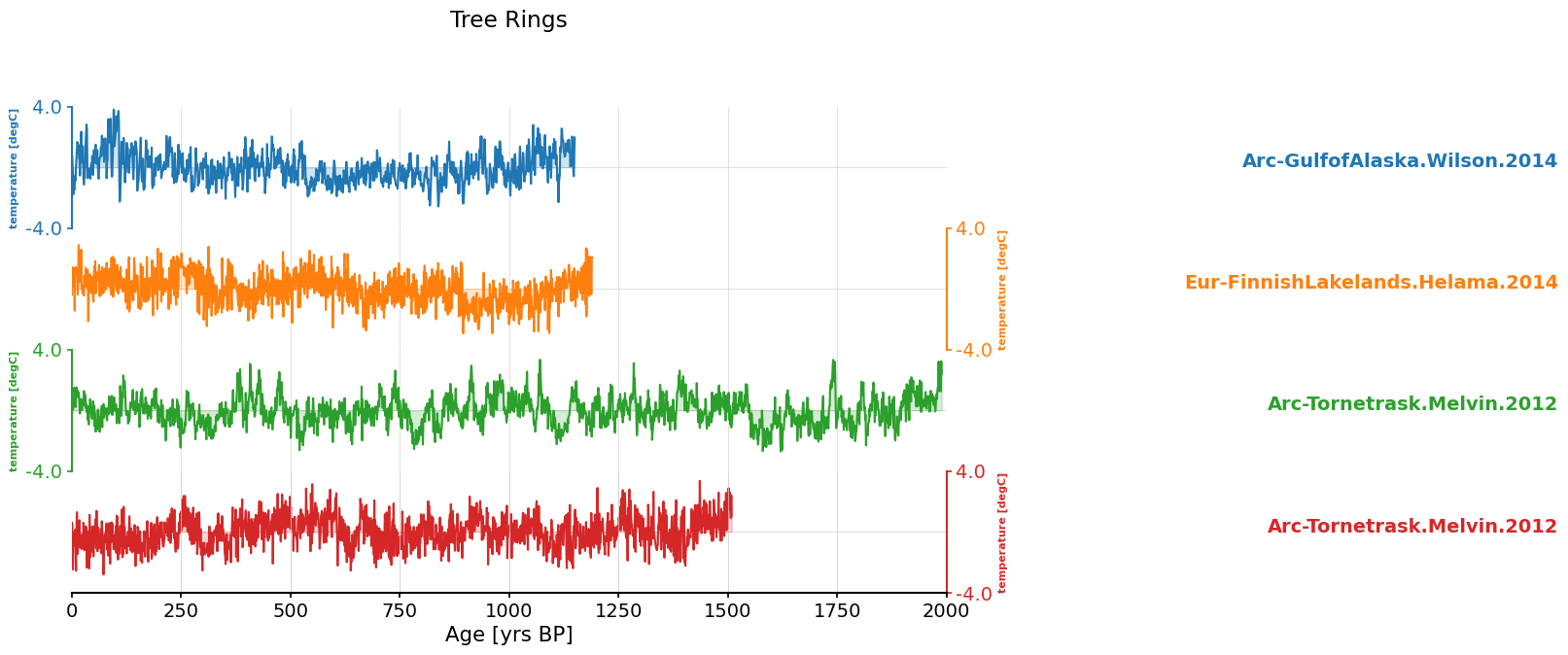

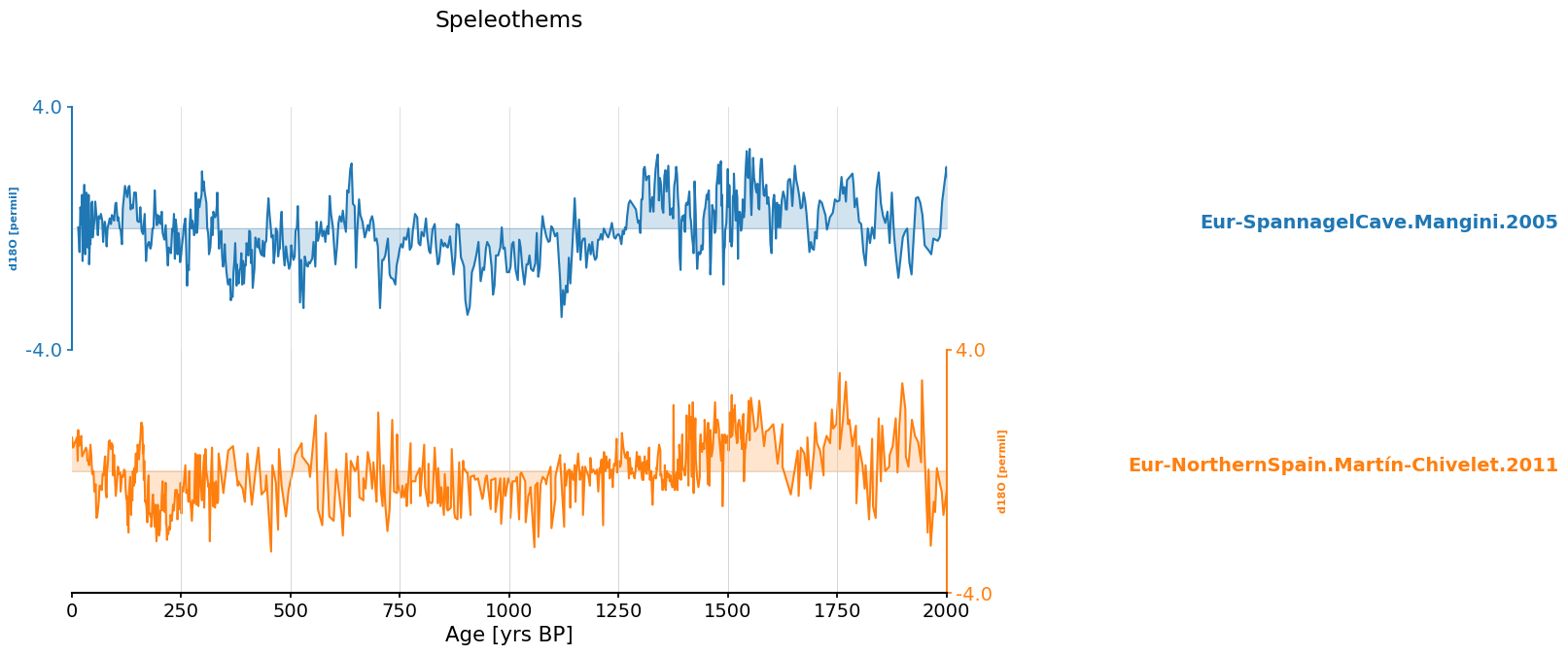

Now we can use Pyleoclim functions to create three stacked plots of this data with lake sediment records on top, tree ring reconstructions in the middle and speleothem records on the bottom.

Note that the colors used for the time series in each plot are the default colors generated by the function, so a color shared between two of the three plots does not indicate that those records are related.

# note the x axis is years before present, so read from left to right moving back in time

ax = lake_stnd.stackplot(

label_x_loc=1.7,

xlim=[0, 2000],

v_shift_factor=1,

figsize=[9, 5],

time_unit="yrs BP",

)

ax[0].suptitle("Lake Cores", y=1.2)

ax = tree_stnd.stackplot(

label_x_loc=1.7,

xlim=[0, 2000],

v_shift_factor=1,

figsize=[9, 5],

time_unit="yrs BP",

)

ax[0].suptitle("Tree Rings", y=1.2)

# recall d18O is a proxy for SST, and that more positive d18O means colder SST

ax = spel_stnd.stackplot(

label_x_loc=1.7,

xlim=[0, 2000],

v_shift_factor=1,

figsize=[9, 5],

time_unit="yrs BP",

)

ax[0].suptitle("Speleothems", y=1.2)

Text(0.5, 1.2, 'Speleothems')
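The plots above use years before present (yrs BP) on the x axis. By convention, "present" is the year 1950 CE, so converting between calendar years and ages is a simple subtraction. The sketch below shows that arithmetic only; the helper names `ce_to_bp` and `bp_to_ce` are hypothetical and are not Pyleoclim functions.

```python
REFERENCE_YEAR = 1950  # "present" in the years-BP convention


def ce_to_bp(year_ce):
    """Convert a calendar year (CE) to an age in years before present."""
    return REFERENCE_YEAR - year_ce


def bp_to_ce(age_bp):
    """Convert an age in years before present back to a calendar year (CE)."""
    return REFERENCE_YEAR - age_bp


years_ce = [1950, 1850, 1000, 1]
ages_bp = [ce_to_bp(year) for year in years_ce]
print(ages_bp)  # [0, 100, 950, 1949]
```

This is why the x axis limit of 2000 yrs BP covers roughly the whole Common Era, and why older parts of each record appear further to the right.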

Questions 2

Using the plots we just made (and recalling that all of these records are from Europe), let’s make some inferences about the temperature data over the past 2,000 years:

Recall that δ18O is a proxy for SST, and that more positive δ18O means colder SST. Do the temperature records based on a single proxy type record similar patterns?

Do the three proxy types collectively record similar patterns?

What might be causing the more frequent variations in temperature?
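One way to make "similar patterns" more concrete is a correlation coefficient. The sketch below computes the Pearson correlation between pairs of made-up standardized records. It assumes the series have already been placed on a common time axis, which the real records are not, so an alignment or interpolation step (not shown) would be needed first. The `pearson_r` helper and all values are hypothetical.

```python
import math


def pearson_r(x, y):
    """Pearson correlation of two equal-length sequences."""
    if len(x) != len(y):
        raise ValueError("series must share a common time axis")
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / math.sqrt(var_x * var_y)


# Made-up standardized values sampled at the same six times.
record_a = [0.2, 0.5, 0.1, -0.3, -0.6, -0.2]
record_b = [0.1, 0.4, 0.2, -0.2, -0.5, -0.4]
record_c = [-0.2, -0.5, -0.1, 0.3, 0.6, 0.2]  # mirror image of record_a

print(round(pearson_r(record_a, record_b), 2))  # close to +1: similar pattern
print(round(pearson_r(record_a, record_c), 2))  # -1.0: opposite pattern
```

A value near +1 or -1 indicates that two records vary together; whether a negative value means agreement or disagreement depends on how each proxy responds to temperature.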

Summary

In this tutorial, we explored how to use the Euro2k proxy network to investigate changes in temperature over the past 2,000 years from tree rings, speleothems, and lake sediments. To analyze these diverse datasets, we categorized them based on their archive type and constructed time series plots.

Resources

Code for this tutorial is based on an existing notebook from LinkedEarth that provides instruction on working with LiPD files.

Data from the following sources are used in this tutorial:

Euro2k database: PAGES2k Consortium, Emile-Geay, J., McKay, N. et al. A global multiproxy database for temperature reconstructions of the Common Era. Sci Data 4, 170088 (2017). https://doi.org/10.1038/sdata.2017.88